
Compositing

Compositing means combining two or more images or video streams into a single frame. This is useful when you want to apply the same processing, such as encoding, to several videos as if they were a single stream. There are a few ways of doing this with GStreamer. You can use a compositor element, or you can use individual sinks that each draw one video stream at a given position on the display. A compositor element is generally preferred because it truly combines the video streams into a single frame, which is ideal for streaming over the network.

In the past, only a software-based compositor was available. It was extremely demanding on the CPU, which led to very choppy video. Newer versions of gstreamer-imx add a hardware-accelerated compositor for i.MX6-based products. For some documentation, please see this page. Examples of both ways of compositing video are shown below.

Pseudo Compositing to Local Video Out

When a system has multiple video inputs that are displayed directly on an attached video output, it is best to create the composition by positioning each stream with the hardware video sinks' coordinate properties.

Instead of using a software compositor element, you can pseudo-composite by running multiple video input pipelines together.

The following example takes two camera inputs and displays them side by side on one HDMI monitor.

This example uses two separate pipelines from source to sink, relying on the imxg2dvideosink element to draw video frames where instructed.

```shell
gst-launch-1.0 \
  imxv4l2videosrc device=/dev/video3 ! imxg2dvideosink window-width=960 window-height=544 window-x-coord=0 window-y-coord=0 \
  imxv4l2videosrc device=/dev/video5 ! imxg2dvideosink window-width=960 window-height=544 window-x-coord=960 window-y-coord=0
```

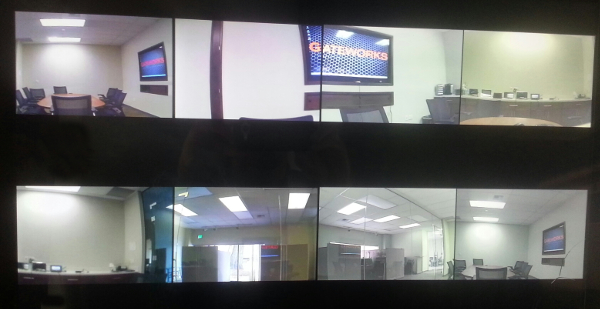

Displaying 8 camera inputs onto an HDMI monitor using AVC8000nano

This assumes a 1920x1080 HDMI monitor is connected to the HDMI output on the SBC.

```shell
gst-launch-1.0 \
  imxv4l2videosrc device=/dev/video2 ! imxg2dvideosink window-width=490 window-height=490 window-x-coord=0 window-y-coord=0 \
  imxv4l2videosrc device=/dev/video3 ! imxg2dvideosink window-width=490 window-height=490 window-x-coord=490 window-y-coord=0 \
  imxv4l2videosrc device=/dev/video4 ! imxg2dvideosink window-width=490 window-height=490 window-x-coord=980 window-y-coord=0 \
  imxv4l2videosrc device=/dev/video5 ! imxg2dvideosink window-width=490 window-height=490 window-x-coord=1470 window-y-coord=0 \
  imxv4l2videosrc device=/dev/video6 ! imxg2dvideosink window-width=490 window-height=490 window-x-coord=0 window-y-coord=540 \
  imxv4l2videosrc device=/dev/video7 ! imxg2dvideosink window-width=490 window-height=490 window-x-coord=490 window-y-coord=540 \
  imxv4l2videosrc device=/dev/video8 ! imxg2dvideosink window-width=490 window-height=490 window-x-coord=980 window-y-coord=540 \
  imxv4l2videosrc device=/dev/video9 ! imxg2dvideosink window-width=490 window-height=490 window-x-coord=1470 window-y-coord=540
```
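The eight-camera layout above follows a simple rule. Camera number i is placed in column i mod 4 and row i div 4. The column pitch is 490 pixels and the row pitch is 540 pixels. Rather than typing every branch by hand, you can generate them. The following is a minimal POSIX shell sketch. The function name `sink_grid` and its argument order are hypothetical helpers, not part of gstreamer-imx. It assumes the cameras are numbered consecutively.

```shell
#!/bin/sh
# Hypothetical helper (not part of gstreamer-imx): print one
# "imxv4l2videosrc ! imxg2dvideosink" branch per camera, laid out row by row.
# Assumes the camera devices are numbered consecutively from FIRST_DEV.
#
# Usage: sink_grid FIRST_DEV COUNT COLS TILE_W TILE_H COL_PITCH ROW_PITCH
sink_grid() {
    first_dev=$1 count=$2 cols=$3 tile_w=$4 tile_h=$5 col_pitch=$6 row_pitch=$7
    i=0
    while [ "$i" -lt "$count" ]; do
        # Column and row of this tile determine its top-left corner on screen
        x=$(( (i % cols) * col_pitch ))
        y=$(( (i / cols) * row_pitch ))
        printf 'imxv4l2videosrc device=/dev/video%d ! imxg2dvideosink window-width=%d window-height=%d window-x-coord=%d window-y-coord=%d\n' \
            $((first_dev + i)) "$tile_w" "$tile_h" "$x" "$y"
        i=$((i + 1))
    done
}
```

For example, `gst-launch-1.0 $(sink_grid 2 8 4 490 490 490 540)` produces the same eight branches as the command above. The expansion is left unquoted on purpose, so the shell splits it into separate arguments. gst-launch-1.0 then joins those arguments back into one pipeline description.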

Compositor Element for Streaming

A compositor element lets you operate on the whole frame after the individual frames have been combined, instead of working on each pipeline separately. Video streaming is one case that benefits, for example when you want to compress the stream to MPEG-4. Without a compositor you would create four separate video streams, each with its own encoder. With a compositor you stream a single combined frame that needs only one encoding.

gstreamer-imx imxg2dcompositor Hardware Accelerated Single Frame Compositing for Streaming

This gstreamer-imx compositor uses the same pad-property notation (for example, `sink_0::xpos`) as the software-based compositor described at the end of this page. Unlike that compositor, it is hardware accelerated by either the GPU or the IPU.

A simple example using the videotestsrc plugin is shown below:

```shell
gst-launch-1.0 \
  imxg2dcompositor name=comp \
    sink_0::alpha=1 sink_0::xpos=0 sink_0::ypos=0 \
    sink_1::alpha=0.5 sink_1::xpos=320 sink_1::ypos=240 ! \
  queue2 ! video/x-raw, width=800, height=600 ! imxipuvideotransform ! imxg2dvideosink \
  videotestsrc ! "video/x-raw" ! comp.sink_0 \
  videotestsrc ! "video/x-raw" ! comp.sink_1
```

You can combine live camera video with other sources as follows:

```shell
gst-launch-1.0 \
  imxg2dcompositor name=c background-color=0x223344 \
    sink_0::xpos=0 sink_0::ypos=0 sink_0::width=400 sink_0::height=300 sink_0::fill_color=0x00000000 \
    sink_1::xpos=400 sink_1::ypos=0 sink_1::width=400 sink_1::height=300 sink_1::fill_color=0x11111111 \
    sink_2::xpos=0 sink_2::ypos=300 sink_2::width=400 sink_2::height=300 sink_2::fill_color=0x22222222 \
    sink_3::xpos=400 sink_3::ypos=300 sink_3::width=400 sink_3::height=300 sink_3::fill_color=0x33333333 \
    sink_4::xpos=200 sink_4::ypos=150 sink_4::width=400 sink_4::height=300 sink_4::fill_color=0x44444444 sink_4::alpha=0.5 ! \
  queue2 ! video/x-raw, width=800, height=600 ! imxg2dvideosink \
  imxv4l2videosrc device=/dev/video3 ! queue2 ! c.sink_0 \
  imxv4l2videosrc device=/dev/video5 ! queue2 ! c.sink_1 \
  videotestsrc pattern=0 ! queue2 ! c.sink_2 \
  videotestsrc pattern=18 ! queue2 ! c.sink_3 \
  videotestsrc pattern=1 ! queue2 ! c.sink_4
```

The same pipeline can also be written with the sources first and the compositor last:

```shell
gst-launch-1.0 \
  imxv4l2videosrc device=/dev/video3 ! queue2 ! c.sink_0 \
  imxv4l2videosrc device=/dev/video5 ! queue2 ! c.sink_1 \
  videotestsrc pattern=0 ! queue2 ! c.sink_2 \
  videotestsrc pattern=18 ! queue2 ! c.sink_3 \
  videotestsrc pattern=1 ! queue2 ! c.sink_4 \
  imxg2dcompositor name=c background-color=0x223344 \
    sink_0::xpos=0 sink_0::ypos=0 sink_0::width=400 sink_0::height=300 sink_0::fill_color=0x00000000 \
    sink_1::xpos=400 sink_1::ypos=0 sink_1::width=400 sink_1::height=300 sink_1::fill_color=0x11111111 \
    sink_2::xpos=0 sink_2::ypos=300 sink_2::width=400 sink_2::height=300 sink_2::fill_color=0x22222222 \
    sink_3::xpos=400 sink_3::ypos=300 sink_3::width=400 sink_3::height=300 sink_3::fill_color=0x33333333 \
    sink_4::xpos=200 sink_4::ypos=150 sink_4::width=400 sink_4::height=300 sink_4::fill_color=0x44444444 sink_4::alpha=0.5 ! \
  queue2 ! video/x-raw, width=800, height=600 ! imxg2dvideosink
```

The above examples showcase several ideas. They resize video and fill the background color where a video does not cover its full area. They combine a total of five pipelines, and they place one of them in the middle of the screen at 50% transparency (`sink_4::alpha=0.5`). Note that you can also use imxipucompositor. However, the IPU is very picky about its input (width and height), so it is generally not recommended for video composition.

The below image (annotated) is how the above pipeline would look:

Notes:

- The colors `0x00000000` and `0x11111111` are used as background fill colors for their frames. Note that the `sink_n::fill_color` property is a 32-bit ABGR value.
- The total size is 800x600, with each of the five frames being 400x300 (outlined in red).
- The top-left corner is coordinate 0, 0.
- You can rotate a single inner video frame by adding `sink_n::rotation=1`. The video stream will be fit to the new aspect ratio, and the background will be colored with the `sink_n::fill_color` property.
- Borders can be added to frames via the `sink_n::left-margin`, `sink_n::right-margin`, `sink_n::top-margin`, and `sink_n::bottom-margin` properties.
- For a complete list of properties to the compositor, please see this section of the gstreamer-imx README.
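Because `sink_n::fill_color` is a 32-bit ABGR value, it can help to build it from separate components. The following is a minimal sketch that assumes the hex literal reads as 0xAABBGGRR, with alpha in the top byte and red in the lowest byte. Please confirm the byte order against the gstreamer-imx README for your version. The function name `abgr` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: pack alpha, blue, green and red (0-255 each) into a
# 32-bit value for sink_n::fill_color.
# ASSUMPTION: the hex literal is read as 0xAABBGGRR (alpha highest, red lowest).
#
# Usage: abgr ALPHA BLUE GREEN RED
abgr() {
    for c in "$1" "$2" "$3" "$4"; do
        # Each component must fit in one byte
        if [ "$c" -lt 0 ] || [ "$c" -gt 255 ]; then
            echo "abgr: component $c out of range 0-255" >&2
            return 1
        fi
    done
    # Alpha in bits 31-24, blue in 23-16, green in 15-8, red in 7-0
    printf '0x%08X\n' $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}
```

For example, `abgr 17 17 17 17` prints `0x11111111`, the value used for `sink_1` above. Under the assumed byte order, `sink_0::fill_color=$(abgr 255 0 0 255)` would request an opaque red fill.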

If you want to stream this via RTSP, you can use the gst-variable-rtsp-server application, which is provided by default in our Yocto 1.8 BSP. Alternatively, you can use the udpsink/udpsrc elements. The following example uses gst-variable-rtsp-server.

```shell
# Streaming at a bitrate of 10 Mbit/s
gst-variable-rtsp-server -u \
  "imxv4l2videosrc device=/dev/video3 ! queue2 ! c.sink_0 \
  imxv4l2videosrc device=/dev/video5 ! queue2 ! c.sink_1 \
  videotestsrc pattern=0 ! queue2 ! c.sink_2 \
  videotestsrc pattern=18 ! queue2 ! c.sink_3 \
  videotestsrc pattern=1 ! queue2 ! c.sink_4 \
  imxg2dcompositor name=c background-color=0x223344 \
    sink_0::xpos=0 sink_0::ypos=0 sink_0::width=400 sink_0::height=300 sink_0::fill_color=0x00000000 \
    sink_1::xpos=400 sink_1::ypos=0 sink_1::width=400 sink_1::height=300 sink_1::fill_color=0x11111111 \
    sink_2::xpos=0 sink_2::ypos=300 sink_2::width=400 sink_2::height=300 sink_2::fill_color=0x22222222 \
    sink_3::xpos=400 sink_3::ypos=300 sink_3::width=400 sink_3::height=300 sink_3::fill_color=0x33333333 \
    sink_4::xpos=200 sink_4::ypos=150 sink_4::width=400 sink_4::height=300 sink_4::fill_color=0x44444444 sink_4::alpha=0.5 ! \
  queue2 ! video/x-raw, width=800, height=600 ! imxipuvideotransform ! imxvpuenc_h264 bitrate=10000 ! rtph264pay name=pay0 pt=96"
```

An example of the pipeline on the receiving side that then displays to an HDMI monitor:

```shell
gst-launch-1.0 rtspsrc location=rtsp://172.24.10.210:9099/stream latency=10 ! decodebin ! autovideosink
```

For other methods of network streaming, please view our streaming page.

Compositor Example with 8 Video Cameras

- First, start the server side code on the SBC with the cameras connected

```shell
gst-variable-rtsp-server -u \
  "imxv4l2videosrc device=/dev/video2 queue-size=55 ! queue2 ! c.sink_0 \
  imxv4l2videosrc device=/dev/video3 queue-size=55 ! queue2 ! c.sink_1 \
  imxv4l2videosrc device=/dev/video4 queue-size=55 ! queue2 ! c.sink_2 \
  imxv4l2videosrc device=/dev/video5 queue-size=55 ! queue2 ! c.sink_3 \
  imxv4l2videosrc device=/dev/video6 queue-size=55 ! queue2 ! c.sink_4 \
  imxv4l2videosrc device=/dev/video7 queue-size=55 ! queue2 ! c.sink_5 \
  imxv4l2videosrc device=/dev/video8 queue-size=55 ! queue2 ! c.sink_6 \
  imxv4l2videosrc device=/dev/video9 queue-size=55 ! queue2 ! c.sink_7 \
  imxg2dcompositor name=c background-color=0xffffff \
    sink_0::xpos=0 sink_0::ypos=0 sink_0::width=320 sink_0::height=360 sink_0::fill_color=0x00000000 \
    sink_1::xpos=320 sink_1::ypos=0 sink_1::width=320 sink_1::height=360 sink_1::fill_color=0x00000000 \
    sink_2::xpos=640 sink_2::ypos=0 sink_2::width=320 sink_2::height=360 sink_2::fill_color=0x00000000 \
    sink_3::xpos=960 sink_3::ypos=0 sink_3::width=320 sink_3::height=360 sink_3::fill_color=0x00000000 \
    sink_4::xpos=0 sink_4::ypos=360 sink_4::width=320 sink_4::height=360 sink_4::fill_color=0x00000000 \
    sink_5::xpos=320 sink_5::ypos=360 sink_5::width=320 sink_5::height=360 sink_5::fill_color=0x00000000 \
    sink_6::xpos=640 sink_6::ypos=360 sink_6::width=320 sink_6::height=360 sink_6::fill_color=0x00000000 \
    sink_7::xpos=960 sink_7::ypos=360 sink_7::width=320 sink_7::height=360 sink_7::fill_color=0x00000000 \
  ! queue2 ! video/x-raw, width=1280, height=720 ! imxipuvideotransform \
  ! imxvpuenc_h264 bitrate=20000 ! rtph264pay name=pay0 pt=96"
```

- Then, start the receiving code on the other SBC, pointing to the IP of the server

```shell
gst-launch-1.0 --gst-debug=3 rtspsrc location=rtsp://172.24.10.210:9099/stream latency=100 ! queue2 ! decodebin ! autovideosink
```
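The compositor pad properties in the eight-camera server pipeline form a regular 4x2 grid of 320x360 tiles inside a 1280x720 frame. The following is a minimal sketch of a generator for such grids. The function name `comp_grid` is hypothetical. It gives every tile the same size and fill color and fills the grid row by row, as in the example.

```shell
#!/bin/sh
# Hypothetical helper: print imxg2dcompositor pad properties
# (sink_N::xpos, ypos, width, height, fill_color) for a COLS x ROWS grid of
# equally sized tiles, numbered row by row starting at sink_0.
#
# Usage: comp_grid COLS ROWS TILE_W TILE_H FILL_COLOR
comp_grid() {
    cols=$1 rows=$2 tile_w=$3 tile_h=$4 fill=$5
    n=0
    while [ "$n" -lt $((cols * rows)) ]; do
        # Tile n sits in column n mod COLS and row n div COLS
        printf 'sink_%d::xpos=%d sink_%d::ypos=%d sink_%d::width=%d sink_%d::height=%d sink_%d::fill_color=%s\n' \
            "$n" $(( (n % cols) * tile_w )) \
            "$n" $(( (n / cols) * tile_h )) \
            "$n" "$tile_w" "$n" "$tile_h" "$n" "$fill"
        n=$((n + 1))
    done
}
```

`comp_grid 4 2 320 360 0x00000000` prints the same eight `sink_0` to `sink_7` property sets as the server pipeline above. When you pass it unquoted on a gst-launch-1.0 command line, for example `imxg2dcompositor name=c $(comp_grid 4 2 320 360 0x00000000) ! ...`, the shell splits it into separate arguments.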

Compositing with limited CPU, low memory bandwidth

Board performance (memory bus usage) can be measured with the following utility, which is included in our Yocto pre-built image:

```shell
/unit_tests/mmdc2
```

Boards with less processing power or lower memory bandwidth may only work at a lower frame rate. The following example requests 15 fps from each camera (`fps-n=15`):

```shell
gst-launch-1.0 \
  imxv4l2videosrc device=/dev/video2 fps-n=15 ! imxg2dvideosink window-width=490 window-height=490 window-x-coord=0 window-y-coord=0 \
  imxv4l2videosrc device=/dev/video3 fps-n=15 ! imxg2dvideosink window-width=490 window-height=490 window-x-coord=490 window-y-coord=0 \
  imxv4l2videosrc device=/dev/video4 fps-n=15 ! imxg2dvideosink window-width=490 window-height=490 window-x-coord=980 window-y-coord=0 \
  imxv4l2videosrc device=/dev/video5 fps-n=15 ! imxg2dvideosink window-width=490 window-height=490 window-x-coord=1470 window-y-coord=0 \
  imxv4l2videosrc device=/dev/video6 fps-n=15 ! imxg2dvideosink window-width=490 window-height=490 window-x-coord=0 window-y-coord=540 \
  imxv4l2videosrc device=/dev/video7 fps-n=15 ! imxg2dvideosink window-width=490 window-height=490 window-x-coord=490 window-y-coord=540 \
  imxv4l2videosrc device=/dev/video8 fps-n=15 ! imxg2dvideosink window-width=490 window-height=490 window-x-coord=980 window-y-coord=540 \
  imxv4l2videosrc device=/dev/video1 fps-n=15 ! imxg2dvideosink window-width=490 window-height=490 window-x-coord=1470 window-y-coord=540
```

You can also reduce bus load by scaling each camera input down (here to 340x240 at 12 fps) before it reaches the compositor:

```shell
gst-launch-1.0 -e -vv \
  imxg2dcompositor name=comp \
    sink_1::xpos=0 sink_1::ypos=0 \
    sink_2::xpos=490 sink_2::ypos=0 \
    sink_3::xpos=980 sink_3::ypos=0 \
    sink_4::xpos=1470 sink_4::ypos=0 \
    sink_5::xpos=0 sink_5::ypos=540 \
    sink_6::xpos=490 sink_6::ypos=540 \
    sink_7::xpos=980 sink_7::ypos=540 \
    sink_8::xpos=1470 sink_8::ypos=540 ! \
  queue2 ! video/x-raw, width=1940, height=1080 ! imxipuvideotransform ! imxipuvideosink \
  imxv4l2videosrc device=/dev/video1 fps-n=12 ! queue2 ! imxipuvideotransform qos=false ! video/x-raw, width=340, height=240 ! comp.sink_1 \
  imxv4l2videosrc device=/dev/video2 fps-n=12 ! queue2 ! imxipuvideotransform qos=false ! video/x-raw, width=340, height=240 ! comp.sink_2 \
  imxv4l2videosrc device=/dev/video3 fps-n=12 ! queue2 ! imxipuvideotransform qos=false ! video/x-raw, width=340, height=240 ! comp.sink_3 \
  imxv4l2videosrc device=/dev/video4 fps-n=12 ! queue2 ! imxipuvideotransform qos=false ! video/x-raw, width=340, height=240 ! comp.sink_4 \
  imxv4l2videosrc device=/dev/video5 fps-n=12 ! queue2 ! imxipuvideotransform qos=false ! video/x-raw, width=340, height=240 ! comp.sink_5 \
  imxv4l2videosrc device=/dev/video6 fps-n=12 ! queue2 ! imxipuvideotransform qos=false ! video/x-raw, width=340, height=240 ! comp.sink_6 \
  imxv4l2videosrc device=/dev/video7 fps-n=12 ! queue2 ! imxipuvideotransform qos=false ! video/x-raw, width=340, height=240 ! comp.sink_7 \
  imxv4l2videosrc device=/dev/video8 fps-n=12 ! queue2 ! imxipuvideotransform qos=false ! video/x-raw, width=340, height=240 ! comp.sink_8
```

These command strings were verified on a GW5100 with Yocto 2.3.
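A rough estimate of the raw video traffic shows why lowering the frame rate or the resolution helps. The following is back-of-envelope arithmetic only, and the function name `raw_rate` is hypothetical. It assumes 2 bytes per pixel (packed YUV 4:2:2 such as UYVY) and counts each frame once. Real bus load is higher, because frames are also read back by the transform, compositor and sink elements.

```shell
#!/bin/sh
# Hypothetical helper: raw video traffic in bytes per second for COUNT
# identical streams. Counts each frame once, so it underestimates real bus load.
# ASSUMPTION: BYTES_PER_PIXEL depends on the pixel format (2 for YUV 4:2:2).
#
# Usage: raw_rate WIDTH HEIGHT BYTES_PER_PIXEL FPS COUNT
raw_rate() {
    echo $(( $1 * $2 * $3 * $4 * $5 ))
}
```

For example, assume eight cameras at a hypothetical 720x480 resolution. At 30 fps, `raw_rate 720 480 2 30 8` prints 165888000 (about 166 MB/s), and at 15 fps the figure halves to 82944000. At 340x240 and 12 fps, `raw_rate 340 240 2 12 8` prints 15667200 (about 16 MB/s). That last figure describes the frames entering the compositor. The cameras themselves still capture at their native resolution, though at the reduced frame rate.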

Software Compositor - Not recommended

This compositor element is a software-based compositor and is very difficult to use in practice. It is very slow at copying buffers around, which adds considerable latency and causes video stuttering. Please consider using the gstreamer-imx compositor instead. For reference, here is a possible pipeline that uses it:

(Not recommended, use gstreamer-imx)

```shell
gst-launch-1.0 \
  compositor name=comp \
    sink_0::alpha=1 sink_0::xpos=0 sink_0::ypos=0 \
    sink_1::alpha=0.5 sink_1::xpos=320 sink_1::ypos=240 ! \
  videoconvert ! imxg2dvideosink \
  videotestsrc ! "video/x-raw,format=AYUV,width=320,height=240,framerate=(fraction)30/1" ! queue2 ! comp.sink_0 \
  videotestsrc ! "video/x-raw,format=AYUV,width=320,height=240,framerate=(fraction)30/1" ! queue2 ! comp.sink_1
```

Attachments (1)

- composite_example.png (419.6 KB ) - added by 8 years ago.
