
Hailo AI - Edge AI Processors

Hailo is a hardware manufacturer that produces high-performance, low-power AI accelerators and vision processors designed to accelerate deep learning applications on embedded edge devices.

These typically come in an M.2 or Mini-PCIe form factor that can be plugged into a Gateworks Single Board Computer (SBC), using an adapter such as the GW16148 for M.2 modules.

Note that Gateworks Venice SBCs provide a single PCIe lane (x1) to each Mini-PCIe slot. A Hailo module that supports four lanes (x4) will therefore run on only one lane, and bandwidth-limited workloads will run correspondingly slower.
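The Python sketch below gives a rough idea of what the single-lane limit means. It estimates raw per-direction PCIe link bandwidth from each generation's transfer rate and line encoding:

- Gen1: 2.5 GT/s with 8b/10b encoding
- Gen2: 5.0 GT/s with 8b/10b encoding
- Gen3: 8.0 GT/s with 128b/130b encoding

It ignores packet and protocol overhead, so real throughput is lower. The figures are generic PCIe numbers, not Hailo or Gateworks measurements.

```python
"""Rough per-direction PCIe link bandwidth estimate (illustrative only)."""

# (transfer rate in GT/s, line-encoding efficiency) per PCIe generation.
# Gen1/Gen2 use 8b/10b encoding; Gen3 uses 128b/130b.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
}


def link_bandwidth_mb_s(generation: int, lanes: int) -> float:
    """Return the approximate usable bandwidth in MB/s for one direction.

    Only line-encoding overhead is removed; TLP/DLLP protocol overhead is
    ignored, so measured throughput will be noticeably lower.
    """
    if lanes < 1:
        raise ValueError("lanes must be >= 1")
    rate_gt_s, efficiency = PCIE_GENERATIONS[generation]
    bits_per_second = rate_gt_s * 1e9 * efficiency * lanes
    return bits_per_second / 8 / 1e6


if __name__ == "__main__":
    for gen in sorted(PCIE_GENERATIONS):
        x1 = link_bandwidth_mb_s(gen, 1)
        x4 = link_bandwidth_mb_s(gen, 4)
        print(f"Gen{gen}: x1 ~{x1:.0f} MB/s, x4 ~{x4:.0f} MB/s")
```

For example, Gen2 x1 works out to roughly 500 MB/s per direction, versus roughly 2000 MB/s for Gen2 x4. Whether a given model is actually limited by this depends on how much data it moves per frame.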

Note, this page is also related to the following Gateworks Wiki pages:

Quick Start Guide

Required Equipment:

- Gateworks Venice SBC with the latest software (testing was done with Ubuntu 22.04 Jammy and the 6.6.8 kernel)

- Hailo AI Hailo-8 M.2 module

- Gateworks GW16148 Mini-PCIe to M.2 M-Key adapter

- Hailo AI Developer Account (free) https://hailo.ai/developer-zone/

Steps:

- Download the Hailo AI software for the Gateworks SBC:

- Log in to the Hailo Developer Zone and go to the software downloads page: https://hailo.ai/developer-zone/software-downloads/

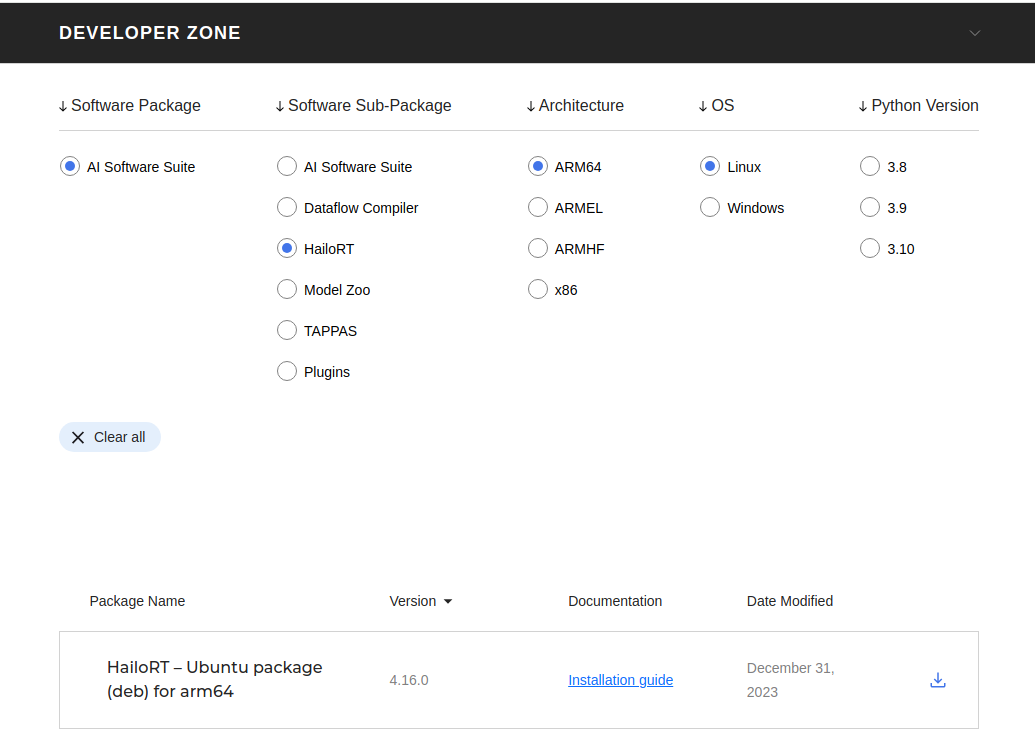

- When selecting a product for download, select 'Accelerators', make sure the 'HailoRT' Sub-package radio button is checked, and set OS to Linux, as shown in the screenshot.

- Download the following files:

- HailoRT – PCIe driver Ubuntu package (deb) (architecture-independent, not specific to arm64)

- HailoRT – Ubuntu package (deb) for arm64

- Hailo Integration Tool – Ubuntu package (deb) for arm64 (not mandatory)

- Boot the Gateworks SBC and access its serial console over JTAG

- Log in to reach the Ubuntu command prompt:

root@jammy-venice:~#

- Transfer the .deb files from the PC to the Gateworks SBC by any convenient method (for example, wget from an HTTP server, scp, or a USB flash drive).

- Install prerequisites:

sudo apt update
sudo apt install build-essential

- Install the PCIe driver first:

root@jammy-venice:~# sudo dpkg -i hailort-pcie-driver_4.16.0_all.deb
(Reading database ... 34758 files and directories currently installed.)
Preparing to unpack hailort-pcie-driver_4.16.0_all.deb ...
Could not test for SecureBoot, assuming SecureBoot is disabled on this machine.
Unpacking hailort-pcie-driver (4.16.0) over (4.16.0) ...
Setting up hailort-pcie-driver (4.16.0) ...
WARNING: apt does not have a stable CLI interface. Use with caution in scripts.
build-essential/jammy,now 12.9ubuntu3 arm64 [installed]
Do you wish to use DKMS? [Y/n]: n
Please reboot your computer for the installation to take effect.
root@jammy-venice:~#

- Then install the HailoRT package:

root@jammy-venice:~# sudo dpkg -i hailort_4.16.0_arm64.deb
Selecting previously unselected package hailort.
(Reading database ... 28728 files and directories currently installed.)
Preparing to unpack hailort_4.16.0_arm64.deb ...
Unpacking hailort (4.16.0) ...
Setting up hailort (4.16.0) ...
Do you wish to activate hailort service? (required for most pyHailoRT use cases) [y/N]:
Stopping hailort.service
root@jammy-venice:~#

- Reboot the system.

- Use the lspci command to verify the Hailo card is seen on the PCIe bus:

root@jammy-venice:~# lspci
00:00.0 PCI bridge: Synopsys, Inc. DWC_usb3 / PCIe bridge (rev 01)
01:00.0 PCI bridge: Pericom Semiconductor PI7C9X2G608GP PCIe2 6-Port/8-Lane Packet Switch
02:01.0 PCI bridge: Pericom Semiconductor PI7C9X2G608GP PCIe2 6-Port/8-Lane Packet Switch
02:02.0 PCI bridge: Pericom Semiconductor PI7C9X2G608GP PCIe2 6-Port/8-Lane Packet Switch
02:03.0 PCI bridge: Pericom Semiconductor PI7C9X2G608GP PCIe2 6-Port/8-Lane Packet Switch
02:04.0 PCI bridge: Pericom Semiconductor PI7C9X2G608GP PCIe2 6-Port/8-Lane Packet Switch
42:00.0 Co-processor: Hailo Technologies Ltd. Hailo-8 AI Processor (rev 01)
c0:00.0 Ethernet controller: Marvell Technology Group Ltd. 88E8057 PCI-E Gigabit Ethernet Controller
root@jammy-venice:~#

- Verify that the HailoRT CLI tool (hailortcli) detects the Hailo PCIe device:

root@jammy-venice:~# hailortcli scan
Hailo Devices:
[-] Device: 0000:03:00.0
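The checks in the last few steps can be scripted. The Python sketch below does three things:

- parses `lspci` output for Hailo devices
- parses `hailortcli scan` output for Hailo devices
- lists any `/dev/hailo*` device nodes

The `Hailo Technologies` match string and the `[-] Device:` line format are taken from the output shown above. The `/dev/hailo*` node name is an assumption about what the HailoRT PCIe driver creates, so adjust it if your system differs.

```python
"""Sanity-check that a Hailo PCIe device is visible (illustrative sketch)."""
import glob
import re
import shutil
import subprocess

# Matches "[-] Device: 0000:03:00.0" lines printed by `hailortcli scan`.
SCAN_DEVICE_RE = re.compile(
    r"Device:\s*([0-9a-fA-F]{4}:[0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.[0-7])"
)


def hailo_lines_from_lspci(lspci_output: str) -> list[str]:
    """Return lspci lines that mention Hailo Technologies."""
    return [line.strip() for line in lspci_output.splitlines()
            if "Hailo Technologies" in line]


def devices_from_scan(scan_output: str) -> list[str]:
    """Return the PCIe addresses reported by `hailortcli scan`."""
    return SCAN_DEVICE_RE.findall(scan_output)


def run_command(cmd: list[str]) -> str:
    """Run a command and return its stdout, or "" if it is not installed."""
    if shutil.which(cmd[0]) is None:
        return ""
    return subprocess.run(cmd, capture_output=True, text=True, check=False).stdout


if __name__ == "__main__":
    print("lspci:", hailo_lines_from_lspci(run_command(["lspci"])) or "no Hailo device found")
    print("hailortcli scan:", devices_from_scan(run_command(["hailortcli", "scan"])) or "none")
    # Assumption: the HailoRT PCIe driver exposes device nodes named /dev/hailoN.
    print("device nodes:", sorted(glob.glob("/dev/hailo*")) or "none")
```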

Usage

Run a HEF file (HEF, the Hailo Executable Format, is a pre-compiled binary model file):

- Pre-compiled HEF files can be downloaded from the Hailo Model Zoo, for example:

root@jammy-venice:~# wget https://hailo-model-zoo.s3.eu-west-2.amazonaws.com/ModelZoo/Compiled/v2.10.0/hailo8/efficientnet_l.hef
--2024-03-20 23:18:31--  https://hailo-model-zoo.s3.eu-west-2.amazonaws.com/ModelZoo/Compiled/v2.10.0/hailo8/efficientnet_l.hef
Resolving hailo-model-zoo.s3.eu-west-2.amazonaws.com (hailo-model-zoo.s3.eu-west-2.amazonaws.com)... 52.95.191.58, 52.95.144.10, 52.95.148.10, ...
Connecting to hailo-model-zoo.s3.eu-west-2.amazonaws.com (hailo-model-zoo.s3.eu-west-2.amazonaws.com)|52.95.191.58|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 11815410 (11M) [binary/octet-stream]
Saving to: ‘efficientnet_l.hef’

efficientnet_l.hef 100%[==============================================================>] 11.27M 5.68MB/s in 2.0s

2024-03-20 23:18:34 (5.68 MB/s) - ‘efficientnet_l.hef’ saved [11815410/11815410]
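The URL in the `wget` command follows a visible pattern: Model Zoo version, target device, then model name. The Python sketch below rebuilds that URL and downloads the file using only the standard library. It checks the byte count against the server's `Content-Length`.

Some caveats:

- The URL layout is inferred from this single example, not from Hailo documentation, so other models or Model Zoo versions may live elsewhere.
- The `model_zoo_url` helper and the script name `fetch_hef.py` are hypothetical.

```python
"""Download a pre-compiled HEF from the Hailo Model Zoo bucket (sketch)."""
import sys
import urllib.request

# Assumed URL layout, inferred from the efficientnet_l example on this page.
BASE_URL = "https://hailo-model-zoo.s3.eu-west-2.amazonaws.com/ModelZoo/Compiled"


def model_zoo_url(version: str, device: str, model: str) -> str:
    """Build a HEF URL, e.g. version="v2.10.0", device="hailo8"."""
    return f"{BASE_URL}/{version}/{device}/{model}.hef"


def download(url: str, dest: str) -> int:
    """Download url to dest in chunks and return the number of bytes written."""
    with urllib.request.urlopen(url) as resp, open(dest, "wb") as out:
        expected = resp.headers.get("Content-Length")
        written = 0
        while chunk := resp.read(64 * 1024):
            out.write(chunk)
            written += len(chunk)
    if expected is not None and written != int(expected):
        raise OSError(f"incomplete download: {written} of {expected} bytes")
    return written


if __name__ == "__main__":
    if len(sys.argv) == 2:
        model = sys.argv[1]
        url = model_zoo_url("v2.10.0", "hailo8", model)
        print(f"saved {download(url, model + '.hef')} bytes from {url}")
    else:
        print("usage: fetch_hef.py MODEL_NAME (for example efficientnet_l)")
```

Running `python3 fetch_hef.py efficientnet_l` should fetch the same file as the `wget` command above, provided the assumed URL layout holds.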

root@jammy-venice:~# hailortcli run efficientnet_l.hef
Running streaming inference (efficientnet_l.hef):
Transform data: true
Type: auto
Quantized: true
Network efficientnet_l/efficientnet_l: 100% | 774 | FPS: 154.57 | ETA: 00:00:00
> Inference result:
Network group: efficientnet_l
Frames count: 774
FPS: 154.58
Send Rate: 333.89 Mbit/s
Recv Rate: 1.25 Mbit/s
root@jammy-venice:~#
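The summary printed by `hailortcli run` supports a quick sanity check of PCIe traffic. Dividing the send and receive rates by FPS gives the approximate data moved per frame. The Python parser below assumes the `FPS:`, `Send Rate:` and `Recv Rate:` lines look like the output above.

With the numbers shown (154.58 FPS, 333.89 Mbit/s sent, 1.25 Mbit/s received), the results are:

- about 270,000 bytes sent per frame, close to the size of a 300 x 300 8-bit RGB image (this page does not state the model's input size)
- about 1,000 bytes received per frame

```python
"""Derive per-frame transfer sizes from `hailortcli run` output (sketch)."""
import re


def parse_run_summary(output: str) -> dict[str, float]:
    """Extract FPS and send/receive rates from the final inference result."""
    # Only look after the "Inference result" marker so the FPS value in the
    # progress line printed while running is ignored.
    summary = output.split("Inference result", 1)[-1]
    patterns = {
        "fps": r"FPS:\s*([\d.]+)",
        "send_mbit_s": r"Send Rate:\s*([\d.]+)\s*Mbit/s",
        "recv_mbit_s": r"Recv Rate:\s*([\d.]+)\s*Mbit/s",
    }
    result = {}
    for key, pattern in patterns.items():
        match = re.search(pattern, summary)
        if match is None:
            raise ValueError(f"could not find {key} in output")
        result[key] = float(match.group(1))
    return result


def bytes_per_frame(rate_mbit_s: float, fps: float) -> float:
    """Convert a Mbit/s rate into bytes transferred per inference frame."""
    return rate_mbit_s * 1e6 / 8 / fps


if __name__ == "__main__":
    sample = (
        "Network efficientnet_l/efficientnet_l: 100% | 774 | FPS: 154.57 | ETA: 00:00:00\n"
        "> Inference result:\n"
        "Network group: efficientnet_l\n"
        "Frames count: 774\n"
        "FPS: 154.58\n"
        "Send Rate: 333.89 Mbit/s\n"
        "Recv Rate: 1.25 Mbit/s\n"
    )
    stats = parse_run_summary(sample)
    print(f"in:  {bytes_per_frame(stats['send_mbit_s'], stats['fps']):.0f} bytes/frame")
    print(f"out: {bytes_per_frame(stats['recv_mbit_s'], stats['fps']):.0f} bytes/frame")
```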

Venice Performance Test (requires the Hailo Integration Tool package from the Hailo Developer Zone software downloads):

root@jammy-venice:~# hailo-integration-tool -g test.json # generate test.json, which selects the tests to run; edit it to set each test to true or false

root@jammy-venice:~# hailo-integration-tool ./test.json
Performance test started
0000:03:00.0 starting Performance Test
0000:03:00.0 - Tested Network: basic_model, Inference Mode: hw_only, Duration: 60 sec, Measured FPS: 1328
0000:03:00.0 - Tested Network: heavy_load, Inference Mode: hw_only, Duration: 60 sec, Measured FPS: 6
0000:03:00.0 - Tested Network: basic_model, Inference Mode: streaming, Duration: 60 sec, Measured FPS: 1328
0000:03:00.0 - Tested Network: heavy_load, Inference Mode: streaming, Duration: 60 sec, Measured FPS: 6
Performance test passed
JSON summary is ready: integration_tool_reports/2023-Apr-04_23-26-02/summary.json
HTML summary is ready: integration_tool_reports/2023-Apr-04_23-26-02/summary.html

root@jammy-venice:~# cat integration_tool_reports/2023-Apr-04_23-26-02/summary.json
{
  "Integration Tool": {
    "Generic info": {
      "Date of execution": "04-04-2023 23-21-58",
      "Tested modules": [
        {
          "PCIe location": "0000:03:00.0",
          "Product name": "HAILO-8 AI ACC M.2 M KEY MODULE EXT TEMP",
          "Serial number": "HLLWM2A220500021"
        }
      ],
      "Tests summary": [
        {
          "PCIe location": "0000:03:00.0",
          "Pass / Fail": "Pass",
          "Test duration [s]": 244.0,
          "Test name": "Performance test"
        }
      ],
      "User configuration comment": "Insert user-specific comment here",
      "Version tool": "1.13.0",
      "continue_to_next_test_upon_failure": false
    },
    "Tests": [
      {
        "Performance test": {
          "Detected PCIe configuration": {
            "#Lanes": 2,
            "Generation": 1
          },
          "Device ID": "0000:03:00.0",
          "Fail description": "",
          "Pass / Fail": "Pass",
          "Results": [
            {
              "Infer Mode": "hw_only",
              "Measured FPS": 1328,
              "Reference0 FPS": -1,
              "Status": "passed",
              "Tested NN": "basic_model"
            },
            {
              "Infer Mode": "hw_only",
              "Measured FPS": 6,
              "Reference0 FPS": -1,
              "Status": "passed",
              "Tested NN": "heavy_load"
            },
            {
              "Infer Mode": "streaming",
              "Measured FPS": 1328,
              "Reference0 FPS": -1,
              "Status": "passed",
              "Tested NN": "basic_model"
            },
            {
              "Infer Mode": "streaming",
              "Measured FPS": 6,
              "Reference0 FPS": -1,
              "Status": "passed",
              "Tested NN": "heavy_load"
            }
          ]
        }
      }
    ]
  }
}
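The JSON report is convenient for scripted regression checks. The Python sketch below loads a `summary.json` with the structure shown above and prints one line per result.

The key names are copied from this example report (Integration Tool 1.13.0) and may change between tool versions. The script name `summarize_report.py` is hypothetical.

```python
"""Summarize a Hailo Integration Tool summary.json report (sketch)."""
import json
import sys


def summarize(report: dict) -> list[str]:
    """Return one human-readable line per performance result in the report."""
    lines = []
    for test in report["Integration Tool"]["Tests"]:
        perf = test.get("Performance test")
        if perf is None:
            continue  # other test types are assumed to use different keys
        pcie = perf.get("Detected PCIe configuration", {})
        lines.append(
            f"{perf['Device ID']}: {perf['Pass / Fail']} "
            f"(PCIe Gen{pcie.get('Generation', '?')} x{pcie.get('#Lanes', '?')})"
        )
        for result in perf["Results"]:
            lines.append(
                f"  {result['Tested NN']:<12} {result['Infer Mode']:<10} "
                f"{result['Measured FPS']:>6} FPS  {result['Status']}"
            )
    return lines


if __name__ == "__main__":
    if len(sys.argv) == 2:
        with open(sys.argv[1], encoding="utf-8") as f:
            print("\n".join(summarize(json.load(f))))
    else:
        print("usage: summarize_report.py path/to/summary.json")
```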

Hailo Examples

Hailo provides several pre-built examples.

Use the following steps:

- Check out the example code:

git clone https://github.com/hailo-ai/Hailo-Application-Code-Examples.git

Static Image Hailo Example

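YOLO stands for "You Only Look Once", a family of real-time object detection models.

COCO stands for Common Objects in Context, a widely used object detection dataset.

The COCO labels text file (coco2017.txt in the command below) is needed to label the detected objects in the image with class names.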
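Conceptually, the labels file turns the numeric class IDs produced by the detector into names. The Python sketch below assumes one class name per line in class-ID order, which is common for COCO label lists. It is not the code from `yolo_inference.py`, and the detection tuples in it are hypothetical.

```python
"""Map detector class IDs to COCO names and format them (illustrative)."""


def load_labels(text: str) -> list[str]:
    """Parse a labels file with one class name per line (blank lines skipped)."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def format_detections(detections: list[tuple[int, float]], labels: list[str]) -> list[str]:
    """Format (class_id, score) pairs as 'name: NN.NN%' strings."""
    out = []
    for class_id, score in detections:
        # Fall back to a generic name if the ID is outside the labels list.
        name = labels[class_id] if 0 <= class_id < len(labels) else f"class_{class_id}"
        out.append(f"{name}: {score * 100:.2f}%")
    return out


if __name__ == "__main__":
    # In practice: labels = load_labels(Path("coco2017.txt").read_text())
    labels = load_labels("person\nbicycle\ncar\n")
    # Hypothetical detector output: (class_id, confidence) pairs.
    for line in format_detections([(0, 0.8902), (1, 0.9216)], labels):
        print(line)
```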

This is a ready-made example from the Hailo GitHub repository: https://github.com/hailo-ai/Hailo-Application-Code-Examples/tree/main/runtime/python/yolo_general_inference

- Run the example, which takes an input picture and labels any objects detected in it.

Code example:

gateworks@jammy-venice:~/apps/apps/Hailo-Application-Code-Examples/runtime/python/yolo_general_inference$ python3 ./yolo_inference.py --labels ./coco2017.txt ./yolov7.hef ./bike.jpg yolo_v7
Warning! Unable to query CPU for the list of supported features.
DEBUG:tensorflow:Falling back to TensorFlow client; we recommended you install the Cloud TPU client directly with pip install cloud-tpu-client.
i | Input layer: yolov7/input_layer1 (640, 640, 3)
i | Output layer: yolov5_nms_postprocess (80, 5, 80)
Labels detected in image0:
person: 89.02%
bicycle: 92.16%
gateworks@jammy-venice:~/apps/apps/Hailo-Application-Code-Examples/runtime/python/yolo_general_inference$

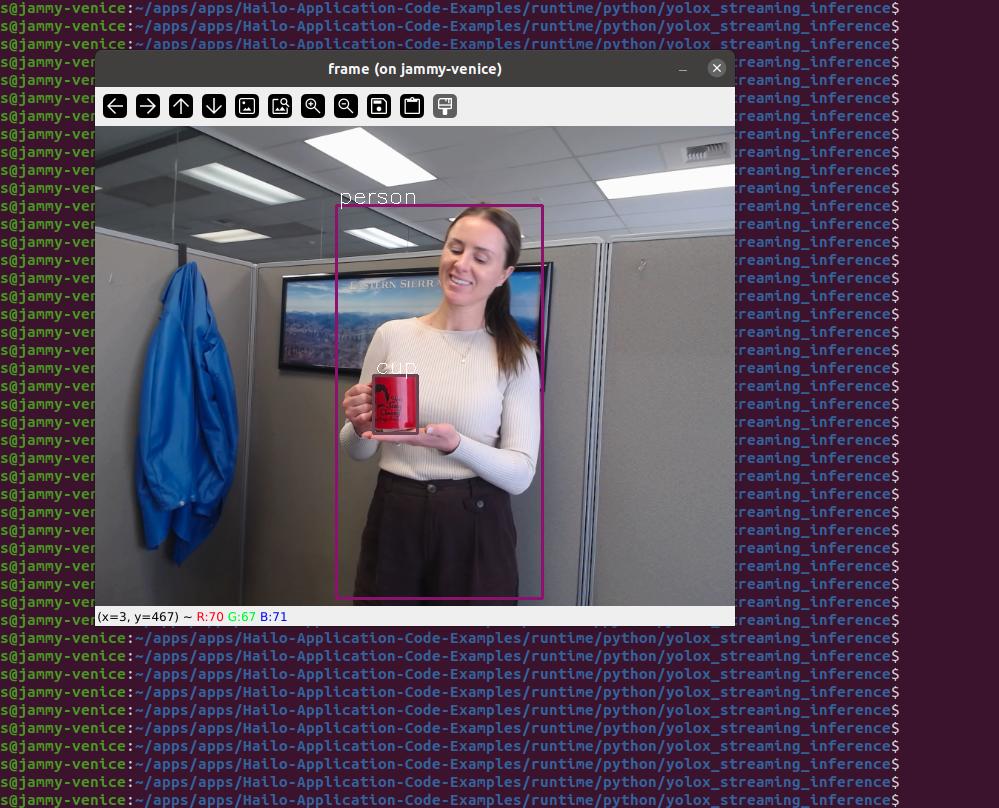

Hailo Webcam Example

This is a ready-made example from the Hailo GitHub repository: https://github.com/hailo-ai/Hailo-Application-Code-Examples/tree/main/runtime/python/yolox_streaming_inference

Connect a known working webcam to the Venice SBC. This example used a Logitech C930e.

Confirm that the webcam is detected with the v4l2-ctl command:

gateworks@jammy-venice:~/apps/apps/Hailo-Application-Code-Examples/runtime/python/yolox_streaming_inference$ v4l2-ctl --list-devices
Logitech Webcam C930e (usb-ci_hdrc.1-1.1):
    /dev/video0
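The Python sketch below scripts this check. It parses `v4l2-ctl --list-devices` output and reports whether the current user can open each node.

The parser assumes the layout shown above: each camera name on its own line, followed by its indented `/dev/video*` nodes. `os.access` reflects only file permissions, which is what the next step changes.

```python
"""List V4L2 cameras and check read/write access (sketch)."""
import os
import shutil
import subprocess


def parse_list_devices(output: str) -> dict[str, list[str]]:
    """Map each device-name line to the /dev nodes indented beneath it."""
    devices: dict[str, list[str]] = {}
    current = None
    for line in output.splitlines():
        if not line.strip():
            continue
        if line[0].isspace():
            if current is not None:
                devices[current].append(line.strip())
        else:
            current = line.strip().rstrip(":")
            devices[current] = []
    return devices


if __name__ == "__main__":
    if shutil.which("v4l2-ctl"):
        out = subprocess.run(["v4l2-ctl", "--list-devices"],
                             capture_output=True, text=True, check=False).stdout
        for name, nodes in parse_list_devices(out).items():
            for node in nodes:
                ok = os.access(node, os.R_OK | os.W_OK)
                print(f"{name}: {node} {'accessible' if ok else 'NOT accessible'}")
    else:
        print("v4l2-ctl not found (Ubuntu package: v4l-utils)")
```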

For this demo only, make the webcam device on the Venice SBC readable and writable by all users:

chmod 777 /dev/video0

Add a non-root user to the Venice SBC, because X11 does not work well when run as root:

sudo adduser frank

From a Linux desktop machine, SSH into the headless Venice SBC with X11 forwarding enabled. Because the Venice SBC has no monitor, this lets the example's GUI window pop up on the Linux desktop:

ssh -X frank@192.168.1.23 # replace the IP address with that of your Venice SBC

From this SSH session on the desktop, run the example code:
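Before launching the example, it can help to confirm that X11 forwarding is active in the SSH session. With default OpenSSH server settings, `ssh -X` normally leaves `DISPLAY` set to something like `localhost:10.0` and `SSH_CONNECTION` set in the remote shell.

The Python check below only inspects those variables. It is a heuristic, not a guarantee that a window will open.

```python
"""Heuristic check that an SSH session has X11 forwarding (sketch)."""
import os
from collections.abc import Mapping


def x11_forwarding_likely(env: Mapping[str, str]) -> bool:
    """Return True if DISPLAY looks like an SSH-forwarded display.

    With default sshd settings (X11UseLocalhost yes, X11DisplayOffset 10),
    a forwarded session typically gets DISPLAY=localhost:10.0 or higher.
    """
    display = env.get("DISPLAY", "")
    return bool(env.get("SSH_CONNECTION")) and display.startswith("localhost:")


if __name__ == "__main__":
    if x11_forwarding_likely(os.environ):
        print(f"X11 forwarding looks active (DISPLAY={os.environ['DISPLAY']})")
    else:
        print("DISPLAY is not a forwarded display; reconnect with: ssh -X user@host")
```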

gateworks@jammy-venice:~/apps/apps/Hailo-Application-Code-Examples/runtime/python/yolox_streaming_inference$ ./yolox_stream_inference.py
['0000:42:00.0']
gateworks@jammy-venice:~/apps/apps/Hailo-Application-Code-Examples/runtime/python/yolox_streaming_inference$

Screenshot of video detection with the webcam (the X11 window pops up on the Linux desktop):

Hailo Tappas

TAPPAS (Optimized Execution of Video-Processing Pipelines) is Hailo's set of full application examples, implementing pipeline elements and pre-trained AI tasks.

Read more here: https://github.com/hailo-ai/tappas?tab=readme-ov-file#hailo-tappas---optimized-execution-of-video-processing-pipelines
