Media Controller

Modern System-on-Chip (SoC) devices contain a wide range of internal hardware blocks. These blocks can be interconnected within the SoC in a variety of ways, and each arrangement alters the content presented by the V4L device.

The Media Controller kernel API has been designed to expose detailed information about media devices and capture devices to userspace in a way that allows them to be interconnected in dynamic and complex ways at runtime.

Media controller devices expose entities to the media controller framework. Each entity has one or more pads, and each pad is either a source pad or a sink pad. You use the media controller kernel API (e.g. via media-ctl) to query entities, link source pads to sink pads, and set formats of pads.

Note that the IMX6 media controller driver was added to Linux in the 4.13 kernel. This page applies to Linux 4.13 and beyond. If you are using a kernel earlier than that for the Ventana IMX6 based boards (such as the Gateworks 3.14 kernel) you will need to use the Yocto GStreamer pages:

- Yocto/gstreamer

- Yocto/gstreamer/compositing

- Yocto/gstreamer/latency

- Yocto/gstreamer/multimedia

- Yocto/gstreamer/streaming

- Yocto/gstreamer/video

media-ctl (v4l-utils package)

The media-ctl application from the v4l-utils package is a userspace application that uses the Linux Media Controller API to configure pipelines.

media-ctl has no manpage, but its source shows the following options:

- --device <dev> media device to use (/dev/media0 default)

- --entity <name> print the device name associated with the given entity

- --set-v4l2 <v4l2> comma-separated list of formats to set up

- --get-v4l2 <pad> print the active format on a given pad

- --set-dv <pad> configure DV timings on a given pad

- --interactive modify links interactively

- --links <links> comma-separated list of link descriptors to set up

- --known-mbus-fmts list known formats and their numeric values

- --print-topology print the device topology

- --reset reset all links to inactive

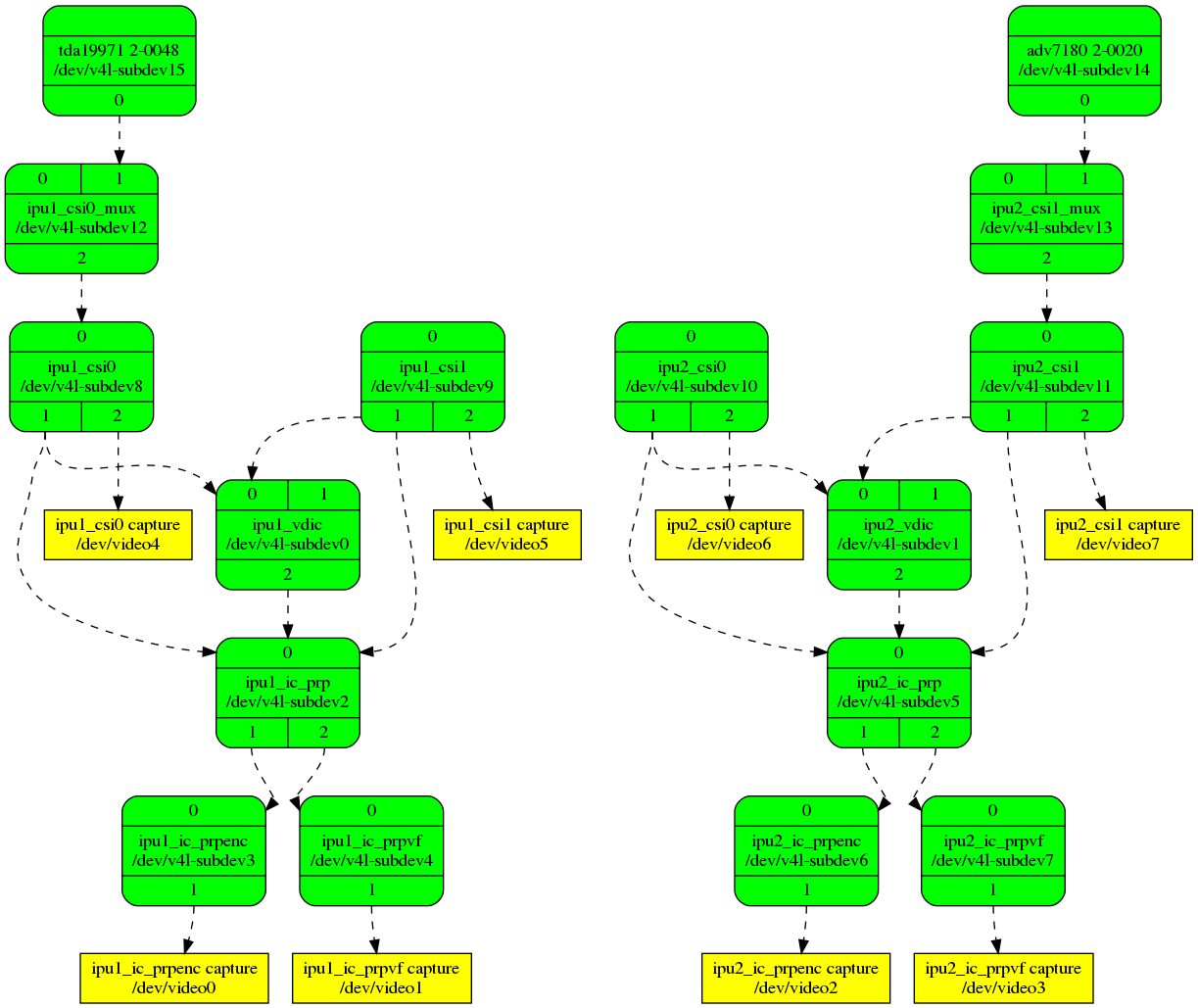

The best way to visualize what hardware components are available to interconnect is to print the topology and convert it to a graphic image:

    media-ctl --print-topology
    media-ctl --print-dot > graph.dot
    dot -Tpng graph.dot > graph.png

- dashed lines show possible connections; use these to determine the possibilities

- solid lines show active connections

- green boxes show media entities

- yellow boxes show video4linux endpoints

For example the following is the graph for a GW5404:

You can find graphs for the video capture devices present on various Gateworks boards at http://dev.gateworks.com/docs/linux/media/

Use media-ctl --links to link an entity source pad to an entity sink pad:

    media-ctl --links "<entitya>:<srcpadn> -> <entityb>:<sinkpadn>[<flags>]"

- flags: 0=inactive 1=active
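As a hedged illustration of the descriptor syntax, a small helper can assemble the string for you. The name `link_desc` is hypothetical (it is not part of v4l-utils), and the entity names in the usage comment are only examples; take the real names from `media-ctl --print-topology`.

```shell
#!/bin/sh
# link_desc is a hypothetical helper (not part of v4l-utils) that formats
# a link descriptor for media-ctl --links.
# usage: link_desc <source entity> <source pad> <sink entity> <sink pad> [flags]
link_desc() {
    # flags default to 1 (active); entity names are single-quoted because
    # they may contain spaces
    printf "'%s':%s -> '%s':%s[%s]\n" "$1" "$2" "$3" "$4" "${5:-1}"
}

# Example (entity names are illustrative):
# media-ctl --links "$(link_desc ipu1_csi0_mux 2 ipu1_csi0 0)"
```

Passing 0 as the fifth argument produces a descriptor that deactivates the link instead.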

The basic configuration steps for a hardware media pipeline are:

- reset all links with media-ctl --reset

- configure links with media-ctl --links

- configure pad formats with media-ctl --set-v4l2

- configure subdev properties with v4l2-ctl

- capture frames on the /dev/video* device
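The steps above can be sketched as a dry-run script. This is a minimal sketch under stated assumptions: the function name, the `RUN` variable, the sensor entity name, the sensor-to-mux pad numbers, the subdev node, and the capture node are all placeholders that must be replaced with values from your own topology. By default the commands are only printed.

```shell
#!/bin/sh
# Dry-run sketch of the configuration steps: commands are printed, not run.
# Export RUN= (empty) to execute them on a target. Entity names, pad
# numbers, device nodes and formats below are assumptions.
RUN=${RUN-echo}

# usage: configure_raw_pipeline <sensor entity> <csi entity> <mbus format>
configure_raw_pipeline() {
    sensor=$1   # e.g. 'adv7180 2-0020' (assumed name)
    csi=$2      # e.g. ipu2_csi1
    fmt=$3      # e.g. UYVY2X8/720x480

    # 1. reset all links
    $RUN media-ctl --reset
    # 2. link sensor -> mux -> csi -> capture node
    $RUN media-ctl --links "'$sensor':0 -> '${csi}_mux':1[1]"
    $RUN media-ctl --links "'${csi}_mux':2 -> '$csi':0[1]"
    $RUN media-ctl --links "'$csi':2 -> '$csi capture':0[1]"
    # 3. set the pad formats along the pipeline
    $RUN media-ctl --set-v4l2 "'$sensor':0 [fmt:$fmt]"
    $RUN media-ctl --set-v4l2 "'${csi}_mux':2 [fmt:$fmt]"
    $RUN media-ctl --set-v4l2 "'$csi':2 [fmt:$fmt]"
    # 4. configure subdev properties (subdev node is an assumption)
    $RUN v4l2-ctl --device /dev/v4l-subdev14 --set-standard NTSC
    # 5. capture one frame (capture node is an assumption)
    $RUN v4l2-ctl --device /dev/video4 --stream-mmap --stream-count=1 --stream-to=x.raw
}

# Example: configure_raw_pipeline 'adv7180 2-0020' ipu2_csi1 UYVY2X8/720x480
```

Printing first makes it easy to review the generated commands against the topology graph before touching the hardware.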

For more information on Video4Linux2 see here.

IMX6 Media Capture

The IMX media controller documentation in the Linux kernel is likely the best documentation to explain what's in the SoC and what each component can do:

The Freescale i.MX5/6 contains an Image Processing Unit (IPU), which handles the flow of image frames to and from capture devices and display devices.

For image capture, the IPU contains the following internal subunits:

- Image DMA Controller (IDMAC)

- Camera Serial Interface (CSI)

- Image Converter (IC)

- Sensor Multi-FIFO Controller (SMFC)

- Image Rotator (IRT)

- Video De-Interlacing or Combining Block (VDIC)

The IDMAC is the DMA controller for transfer of image frames to and from memory. Various dedicated DMA channels exist for both video capture and display paths. During transfer, the IDMAC is also capable of vertical image flip, 8x8 block transfer (see IRT description), pixel component re-ordering (for example UYVY to YUYV) within the same colorspace, and packed <--> planar conversion. The IDMAC can also perform a simple de-interlacing by interweaving even and odd lines during transfer (without motion compensation which requires the VDIC).

The CSI is the backend capture unit that interfaces directly with camera sensors over Parallel, BT.656/1120, and MIPI CSI-2 busses.

The IC handles color-space conversion, resizing (downscaling and upscaling), horizontal flip, and 90/270 degree rotation operations.

There are three independent "tasks" within the IC that can carry out conversions concurrently: pre-process encoding, pre-process viewfinder, and post-processing. Within each task, conversions are split into three sections: downsizing section, main section (upsizing, flip, colorspace conversion, and graphics plane combining), and rotation section.

The IPU time-shares the IC task operations. The time-slice granularity is one burst of eight pixels in the downsizing section, one image line in the main processing section, one image frame in the rotation section.

The SMFC is composed of four independent FIFOs that each can transfer captured frames from sensors directly to memory concurrently via four IDMAC channels.

The IRT carries out 90 and 270 degree image rotation operations. The rotation operation is carried out on 8x8 pixel blocks at a time. This operation is supported by the IDMAC which handles the 8x8 block transfer along with block reordering, in coordination with vertical flip.

The VDIC handles the conversion of interlaced video to progressive, with support for different motion compensation modes (low, medium, and high motion). The deinterlaced output frames from the VDIC can be sent to the IC pre-process viewfinder task for further conversions. The VDIC also contains a Combiner that combines two image planes, with alpha blending and color keying.

In addition to the IPU internal subunits, there are also two units outside the IPU that are also involved in video capture on i.MX:

- MIPI CSI-2 Receiver for camera sensors with the MIPI CSI-2 bus interface. This is a Synopsys DesignWare core.

- Two video multiplexers for selecting among multiple sensor inputs to send to a CSI.

These entities will differ depending on the processor: IMX6D/Q have two IPUs while IMX6S/DL have only one, so the number of entities and pads differs. Additionally, certain entities will have a fixed configuration based on device-tree endpoints. The adv7180 analog media controller driver also presents an entity, as does the tda1997x HDMI receiver media controller driver.

References:

Kernel Drivers

The following kernel drivers need to be enabled for IMX6 capture:

- CONFIG_MEDIA_CONTROLLER=y - for media controller drivers and framework

- CONFIG_VIDEO_V4L2_SUBDEV_API=y - for V4L2 subdev framework

- CONFIG_VIDEO_IMX_MEDIA=y - for IMX6 media controller

- CONFIG_VIDEO_MUX=y - Video Multiplexer

- CONFIG_VIDEO_ADV7180=m - for Ventana Analog CVBS Video Capture (module)

- CONFIG_VIDEO_TDA1997X=m - for Ventana Digital HDMI Video Capture (module)
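One possible way to verify these options against a kernel config file is sketched below. The helper name `missing_options` is hypothetical, and reading `/proc/config.gz` assumes the kernel was built with CONFIG_IKCONFIG_PROC, which may not be the case on your image.

```shell
#!/bin/sh
# missing_options is a hypothetical helper: it prints every option from the
# list above that is not set to y or m in the given kernel config file.
# usage: missing_options <config file>
missing_options() {
    config=$1
    for opt in CONFIG_MEDIA_CONTROLLER CONFIG_VIDEO_V4L2_SUBDEV_API \
               CONFIG_VIDEO_IMX_MEDIA CONFIG_VIDEO_MUX \
               CONFIG_VIDEO_ADV7180 CONFIG_VIDEO_TDA1997X; do
        grep -Eq "^${opt}=[ym]$" "$config" || echo "$opt"
    done
}

# Example (assumes CONFIG_IKCONFIG_PROC is enabled):
# zcat /proc/config.gz > /tmp/config && missing_options /tmp/config
```

An empty result means all six options are enabled either built-in or as modules.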

The kernel will display messages such as:

    root@ventana:~# dmesg | grep media
    [ 0.343835] media: Linux media interface: v0.10
    [ 6.189587] imx-media: Registered subdev ipu2_csi1_mux
    [ 6.195114] imx-media: Registered subdev ipu1_csi0_mux
    [ 6.200928] imx-media: Registered subdev ipu1_vdic
    [ 6.206251] imx-media: Registered subdev ipu2_vdic
    [ 6.211493] imx-media: Registered subdev ipu1_ic_prp
    [ 6.216580] imx-media: Registered subdev ipu1_ic_prpenc
    [ 6.230109] imx-media: Registered subdev ipu1_ic_prpvf
    [ 6.242937] imx-media: Registered subdev ipu2_ic_prp
    [ 6.248023] imx-media: Registered subdev ipu2_ic_prpenc
    [ 6.261065] imx-media: Registered subdev ipu2_ic_prpvf
    [ 6.273972] imx-media: Registered subdev ipu1_csi0
    [ 6.292605] imx-media: Registered subdev ipu1_csi1
    [ 6.304263] imx-media: Registered subdev ipu2_csi0
    [ 6.315870] imx-media: Registered subdev ipu2_csi1
    [ 6.327352] imx-media: imx_media_create_link: ipu1_csi0:1 -> ipu1_ic_prp:0
    [ 6.334307] imx-media: imx_media_create_link: ipu1_csi0:1 -> ipu1_vdic:0
    [ 6.341128] imx-media: imx_media_create_link: ipu1_csi0_mux:2 -> ipu1_csi0:0
    [ 6.348200] imx-media: imx_media_create_link: ipu1_csi1:1 -> ipu1_ic_prp:0
    [ 6.355253] imx-media: imx_media_create_link: ipu1_csi1:1 -> ipu1_vdic:0
    [ 6.362026] imx-media: imx_media_create_link: ipu2_csi0:1 -> ipu2_ic_prp:0
    [ 6.368924] imx-media: imx_media_create_link: ipu2_csi0:1 -> ipu2_vdic:0
    [ 6.375717] imx-media: imx_media_create_link: ipu2_csi1:1 -> ipu2_ic_prp:0
    [ 6.382661] imx-media: imx_media_create_link: ipu2_csi1:1 -> ipu2_vdic:0
    [ 6.389419] imx-media: imx_media_create_link: ipu2_csi1_mux:2 -> ipu2_csi1:0
    [ 6.396529] imx-media: imx_media_create_link: ipu1_vdic:2 -> ipu1_ic_prp:0
    [ 6.403487] imx-media: imx_media_create_link: ipu1_ic_prp:1 -> ipu1_ic_prpenc:0
    [ 6.410858] imx-media: imx_media_create_link: ipu1_ic_prp:2 -> ipu1_ic_prpvf:0
    [ 6.418104] imx-media: imx_media_create_link: ipu2_vdic:2 -> ipu2_ic_prp:0
    [ 6.425095] imx-media: imx_media_create_link: ipu2_ic_prp:1 -> ipu2_ic_prpenc:0
    [ 6.432764] imx-media: imx_media_create_link: ipu2_ic_prp:2 -> ipu2_ic_prpvf:0

/dev/media devices are acted on by the media controller framework. Even though the imx-media driver registers subdevs, the /dev/media device doesn't show up until the endpoint driver (adv7180, tda1997x) loads, because that is the endpoint in the device-tree (both endpoints have to exist before it shows up as available).

Media Controller Pipelines

Various pipelines of media controller entities are created by linking them together in a fashion that starts with a Linux v4l2 capture chip and ends with a Linux v4l2 capture node (e.g. /dev/video4).

The media pipelines you create will depend on:

- board model and CPU

- features and performance you are after

The Ventana baseboards have the following video capture capabilities:

- GW54xx:

  - CVBS (Analog) video capture (ADV7180)

  - HDMI (Digital) video capture (TDA1997X)

- GW551x:

  - HDMI (Digital) video capture (TDA1997X)

- GW51xx/GW52xx/GW53xx/GW553x:

  - CVBS (Analog) video capture (ADV7180)

The IPU and CSI port for each capture device depends not only on the board model but the IMX6 SoC variant as well.

The following table shows the various IPU/CSI combinations for each capture device per board and SoC:

| Board | SoC | ADV7180 Analog CVBS | TDA1997x Digital HDMI |
|---|---|---|---|
| GW5404 | IMX6Q | IPU2_CSI1 | IPU1_CSI0 |
| GW5304/GW5204 | IMX6Q | IPU2_CSI1 | N/A |
| GW5300/GW5200 | IMX6DL | IPU1_CSI1 | N/A |
| GW5104/GW5534 | IMX6Q | IPU1_CSI0 | N/A |
| GW5100/GW5530 | IMX6DL | IPU1_CSI0 | N/A |
| GW5514 | IMX6Q | N/A | IPU1_CSI0 |
| GW5510 | IMX6S | N/A | IPU1_CSI0 |
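For scripting, the table above can be mirrored as a lookup. This is only a sketch: `capture_csi` is a hypothetical function, and it expects you to pass the board model yourself since no detection method is described here.

```shell
#!/bin/sh
# capture_csi is a hypothetical lookup that mirrors the table above.
# usage: capture_csi <board> <adv7180|tda1997x>
# prints the lower-case entity prefix (e.g. ipu2_csi1), or fails with 1
capture_csi() {
    case "$1:$2" in
        GW5404:adv7180|GW5304:adv7180|GW5204:adv7180)
            echo ipu2_csi1 ;;
        GW5300:adv7180|GW5200:adv7180)
            echo ipu1_csi1 ;;
        GW5104:adv7180|GW5534:adv7180|GW5100:adv7180|GW5530:adv7180)
            echo ipu1_csi0 ;;
        GW5404:tda1997x|GW5514:tda1997x|GW5510:tda1997x)
            echo ipu1_csi0 ;;
        *)
            echo "no $2 capture listed for $1" >&2
            return 1 ;;
    esac
}

# Example: csi=$(capture_csi GW5404 adv7180)
```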

The pipelines you construct with media-ctl will differ depending on what you are trying to accomplish as different hardware blocks (translated to entities in the media controller graph) can perform different translations such as colorspace conversion, scaling, rotation, or de-interlacing. In some cases there are multiple ways to achieve a certain translation with different trade-offs.

The IMX6 media driver registers several entities that take advantage of the IMX6 hardware (See: Documentation/media/v4l-drivers/imx):

- ipu_csi_mux - IPU CSI Multiplexer: Multiplexes the parallel capture bus and the MIPI capture bus to the Camera Sensor Interface (CSI)

- ipu_csi - IPU Camera Sensor Interface (IDMAC) (latches parallel data on the bus, or de-serializes MIPI data from the bus). In addition to raw frame capture this supports:

  - cropping using the crop selection subdev API

  - fixed divide-by-two down-scaling independently in width and height using the compose selection subdev API

  - frame skipping specified by setting the frame intervals at the sink and source pads

  - simple de-interlacing without motion compensation (activated if the sink pad's field type is an interlaced type and the source pad field type is set to none)

  - pixel reordering within the same colorspace, e.g. UYVY2X8 -> YUYV2X8

  - pixel plane conversion: YUV -> YUV420 (planar)

  - frame interval monitor: Decoders such as the adv718x occasionally send corrupt fields during NTSC/PAL signal re-sync (too few or too many video lines). When this happens the IPU triggers a mechanism to re-establish vertical sync by adding a dummy line every frame, causing a rolling effect from image to image or even a permanent split image. The 'Frame Interval Monitor' (FIM) can be used to detect corrupt fields and send a V4L2_EVENT_IMX_FRAME_INTERVAL_ERROR that can be caught to issue a restart to correct the rolling/split image. The ipu_csi subdev includes custom controls to configure the FIM. The V4L FIM controls include V4L2_CID_IMX_FIM_NUM_SKIP, V4L2_CID_IMX_FIM_ICAP_CHANNEL and V4L2_CID_IMX_FIM_ICAP_EDGE.

- ipu_vdic - Conversion of interlaced video to progressive with support for different motion compensation modes (low/med/high motion). The mode is specified with the V4L2_CID_DEINTERLACING_MODE menu control. This entity has a direct connection pad from the ipu_csi entity (in which case only high motion mode can be used) and an additional sink pad that can be used with other output or mem2mem device nodes (where high, low, or medium motion mode can be used).

- ipu_ic_prp - IC pre-processing entity: acts as a router, routing data between source and sink pads. This entity can source to either ipu_ic_prpenc or ipu_ic_prpvf if it is sinking from ipu_vdic, or it can sink directly from ipu_csi and source both output pads at the same time.

- ipu_ic_prpenc - IC pre-processing encode entity provides:

  - color-space conversion

  - resizing (downscaling and upscaling)

  - horizontal and vertical flip, and 90/270 degree rotation using the standard V4L2 controls

  - simple de-interlace without motion compensation

  - pixel reordering

- ipu_ic_prpvf - IC pre-process viewfinder entity: This is identical to the ipu_ic_prpenc entity supporting:

  - color-space conversion

  - horizontal and vertical flip, and 90/270 degree rotation using the standard V4L2 controls

  - simple de-interlace without motion compensation (unless the ipu_vdic is included in the pipeline, as the de-interlacing has then already been done by the vdic with motion compensation)

  - pixel reordering

Ignoring the input and output pad numbering, and the IPU and CSI indices the following pseudo pipelines are useful:

- raw capture of input resolution:

    sensor -> ipu_csi_mux -> ipu_csi -> ipu_csi capture

  - use tda19971 source for digital HDMI and adv7180 source for analog CVBS

  - use correct indices on ipu and csi above depending on board/soc/input (see above table)

  - the IDMAC can be used for simple de-interlacing of interlaced video from the source by specifying a format with 'field:seq-tb' on the ipu_csi_mux and ipu_csi entities.

- de-interlace via VDIC high motion compensation:

    sensor -> ipu_csi_mux -> ipu_csi -> ipu_vdic -> ipu_ic_prp -> ipu_ic_prpvf -> ipu_ic_prpvf capture

  - use tda19971 source for digital HDMI and adv7180 source for analog CVBS

  - use correct indices on ipu and csi above depending on board/soc/input (see above table)

  - specify 'field:interlaced' on the format for ipu_csi_mux, ipu_csi, ipu_vdic and 'field:none' on ipu_ic_prp and ipu_ic_prpvf (because ipu_ic_prp is the entity that can deinterlace with the IDMAC)

- IC direct conversions:

  - uses preprocess encode entity to route frames directly from the CSI to the IC to carry out scaling up to 1024x1024 resolution, CSC, flipping, and image rotation:

    sensor -> ipu_csi_mux -> ipu_csi -> ipu_ic_prp -> ipu_ic_prpenc -> ipu_ic_prpenc capture

- Motion compensated de-interlace:

  - routes frames from the CSI direct to the VDIC for motion-compensated (high mode) de-interlacing, scaling up to 1024x1024, CSC, flip, and rotation:

    sensor -> ipu_csi_mux -> ipu_csi -> ipu_vdic -> ipu_ic_prp -> ipu_ic_prpvf -> ipu_ic_prpvf capture

Note that to aid configuration, and for backward compatibility with V4L2 applications that use controls, the capture device interfaces inherit controls from the active entities in the current pipeline. These controls can therefore be accessed either via the entity subdev or via the active capture device interface. For example the FIM controls are available from the ipu_csi subdevs or from the active capture device.
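To make the pseudo pipelines above more concrete, the sketch below expands two of them into link descriptors once the IPU and CSI indices are known. It is illustrative only: `pipeline_links` is a hypothetical helper, the pad numbers between IPU entities follow the imx_media_create_link lines in the dmesg output earlier on this page, and the sensor-to-mux pads and the pads feeding the capture nodes are assumptions that should be checked against your own topology.

```shell
#!/bin/sh
# pipeline_links is a hypothetical helper that prints one media-ctl link
# descriptor per line for a pseudo pipeline.
# usage: pipeline_links <raw|vdic> <sensor entity> <ipu index> <csi index>
pipeline_links() {
    mode=$1
    sensor=$2
    ipu="ipu${3}"
    csi="ipu${3}_csi${4}"

    # sensor -> ipu_csi_mux -> ipu_csi (sensor/mux pads are assumptions)
    echo "'$sensor':0 -> '${csi}_mux':1[1]"
    echo "'${csi}_mux':2 -> '$csi':0[1]"
    case "$mode" in
        raw)
            # ipu_csi -> ipu_csi capture (capture pad is an assumption)
            echo "'$csi':2 -> '$csi capture':0[1]" ;;
        vdic)
            # ipu_csi -> ipu_vdic -> ipu_ic_prp -> ipu_ic_prpvf -> capture
            echo "'$csi':1 -> '${ipu}_vdic':0[1]"
            echo "'${ipu}_vdic':2 -> '${ipu}_ic_prp':0[1]"
            echo "'${ipu}_ic_prp':2 -> '${ipu}_ic_prpvf':0[1]"
            echo "'${ipu}_ic_prpvf':1 -> '${ipu}_ic_prpvf capture':0[1]" ;;
        *)
            echo "unknown mode: $mode" >&2
            return 1 ;;
    esac
}

# Example (sensor entity name is illustrative):
# pipeline_links vdic 'adv7180 2-0020' 2 1 |
#     while read -r l; do media-ctl --links "$l"; done
```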

Media Controller Setup Script

Because the combinations of sensor, CPU, and board are plentiful, we have created a script that generates an appropriate pipeline configuration script and needs only to know what sensor you wish to use.

By default the script will create a pipeline customized for the particular sensor you are targeting (adv7180 for analog video decoder or tda1997x for HDMI decoder). However you can use the MODE env variable to alter the pipeline mode (see examples below).

Installation: The following scripts require GStreamer to be installed, see here for instructions.

The media-ctl-setup script is already installed on the Gateworks Ubuntu images; however, if you need to install it manually you can download it from the git repository:

    wget https://raw.githubusercontent.com/Gateworks/media-ctl-setup/master/media-ctl-setup
    chmod +x media-ctl-setup

Examples:

- Analog Video Decoder:

  - CVBS NTSC raw capture:

        v4l2-ctl --device /dev/v4l-subdev14 --set-standard NTSC
        MODE=0 ./media-ctl-setup adv7180 > setup
        source ./setup

  - CVBS NTSC hardware de-interlaced capture:

        v4l2-ctl --device /dev/v4l-subdev14 --set-standard NTSC
        ./media-ctl-setup adv7180 > setup
        source ./setup

  - CVBS PAL hardware de-interlaced capture:

        v4l2-ctl --device /dev/v4l-subdev14 --set-standard PAL
        ./media-ctl-setup adv7180 > setup
        source ./setup

- Digital HDMI capture

  - Note: These lines need to be run each time an HDMI source / resolution is changed.

  - Note: An EDID may need to be set before running the scripts (see #EDID )

        ./media-ctl-setup tda1997x > setup
        source ./setup

  - Example output for tda1997 on a 5.15 kernel:

        root@jammy-ventana:~# source ./setup
        BT timings set
        DV timings:
            Active width: 1920
            Active height: 1080
            Total width: 2200
            Total height: 1125
            Frame format: progressive
            Polarities: +vsync +hsync
            Pixelclock: 148500000 Hz (60.00 frames per second)
            Horizontal frontporch: 88
            Horizontal sync: 44
            Horizontal backporch: 148
            Vertical frontporch: 4
            Vertical sync: 5
            Vertical backporch: 36
            Standards: CTA-861, DMT
            CTA-861 VIC: 16
            Flags: framerate can be reduced by 1/1.001, CE-video, has CTA-861 VIC
        [fmt:UYVY8_1X16/1920x1080@1/30 field:none colorspace:rec709 xfer:709 ycbcr:709 quantization:lim-range]
        DEVICE=/dev/video4
        ENCODER=/dev/video2
        DECODER=/dev/video3
        GST_CONVERT=v4l2convert

Note that in the above examples we 'source' the setup script instead of executing it so that it can export variables such as the video capture device (the DEVICE env variable), which are used in further examples below.

Note that you must re-run the media-ctl-setup script and its generated output commands whenever the input source changes (e.g. Analog NTSC->PAL, or a change of HDMI input format such as resolution, colorspace, or framerate).

Note that for many Analog decoder chips such as the adv7180 used on Ventana you must stop and re-start streaming if you lose or gain signal lock (ie Analog video input is disconnected and reconnected or simply connected after streaming starts). This is because many analog video decoders end up producing some incomplete frames right after lock and these frames must be discarded or they will confuse the IMX6. There is no support for discarding the first few frames in mainline linux at this time, but you can use the following patch which is included in Gateworks media kernel: https://github.com/Gateworks/linux-imx6/commit/fcab532

To detect when the input changes on a capture source you can catch the V4L2_EVENT_SOURCE_CHANGE event via the VIDIOC_DQEVENT ioctl - see https://www.linuxtv.org/downloads/v4l-dvb-apis-old/vidioc-dqevent.html

Analog Video Capture Sync and determining loss of signal/sync

A common issue when capturing analog video via the adv7180 sensor is that the video does not synchronize properly if the signal is lost and re-acquired. This is because the adv7180 (like most analog video decoders) starts presenting decoded video pixel data before it sees a vertical sync indicating the start of a frame. This will confuse the IMX6 capture and cause an image that either continually rolls vertically or appears split horizontally.

On stream startup the IMX6 capture driver will throw away the first few frames in order to avoid this problem via a patch in the Gateworks kernel.

However, if this issue is occurring because your analog video signal is intermittent you must detect the loss of sync and restart capture.

This issue appears to be more prevalent if you are using the IMX6 hardware de-interlacer. If you want to try capturing interlaced video to avoid this you can capture 1 field of the video (half height) and resize it to full height as follows:

    # setup pipeline using MODE0 (raw)
    MODE=0 media-ctl-setup adv7180 > adv7180_raw
    . ./adv7180_raw
    # launch gstreamer
    gst-launch-1.0 v4l2src device=$DEVICE ! $GST_CONVERT ! video/x-raw,width=640,height=480 ! kmssink can-scale=false

If you want to use the hardware de-interlacer you can detect the loss of sync in two possible ways using v4l2 event notifiers (available in 5.4+ kernel):

- via the adv7180 V4L2_EVENT_SOURCE_CHANGE event, which is supposed to fire when a source changes status

- via the imx6 capture driver 'Frame Interval Monitor', which measures the interval between frames and reports an error when frames arrive at an inappropriate interval

The way you would use these notifiers is to use the VIDIOC_SUBSCRIBE_EVENT ioctl in an application to subscribe to the specific event you want. If you are using gst-launch to create a simple pipeline from userspace you can use the v4l2-ctl --wait-for-event, --poll-for-event, or --epoll-for-event parameters to wait for or check for an event, meaning you would launch the pipeline in the background, wait for an event, then kill and restart the pipeline. Examples using gst-launch follow.

For example to catch the V4L2_EVENT_SOURCE_CHANGE from the adv7180 via shell:

    # setup pipeline
    media-ctl-setup adv7180 > adv7180_deinterlace
    . ./adv7180_deinterlace
    # launch gstreamer in background
    gst-launch-1.0 v4l2src device=$DEVICE ! $GST_CONVERT ! kmssink &
    # wait for a V4L2_EVENT_SOURCE_CHANGE event (id=5)
    v4l2-ctl --device=$DEVICE --wait-for-event=5
    # now you can kill and restart gstreamer

The downside of this is that, in our experience, the adv7180 does not consistently give you interrupts on signal loss and detection.

The other method is to use the IMX6 capture 'Frame Interval Monitor'. The downside to this is that it can take a bit of tuning and that it is only available on the ipu_csi capture device, meaning you can't use the de-interlacer and will get only the even or odd lines (half height).

To use the FIM method you would do something like the following steps:

    # setup pipeline
    media-ctl-setup adv7180 > adv7180_deinterlace
    . ./adv7180_deinterlace
    # enable frame interval monitor
    v4l2-ctl --device $DEVICE --set-ctrl=fim_enable=1
    # you may need to adjust fim_num_average/fim_tolerance_min/fim_tolerance_max/fim_num_skip to your liking
    # launch gstreamer in background
    gst-launch-1.0 v4l2src device=$DEVICE ! $GST_CONVERT ! kmssink &
    # wait for a V4L2_EVENT_IMX_FRAME_INTERVAL_ERROR event (id=0x08000001)
    v4l2-ctl --device=$DEVICE --wait-for-event=0x08000001
    # now you can kill and restart gstreamer
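Both examples end with "kill and restart gstreamer"; that last step could be automated with a loop along the following lines. This is a sketch under assumptions rather than a tested recipe: `run_with_restart`, `WAIT_CMD`, and `MAX_RESTARTS` are hypothetical names introduced here, and the default wait command assumes the V4L2_EVENT_SOURCE_CHANGE event (id 5) on the capture device in DEVICE.

```shell
#!/bin/sh
# Sketch: restart a capture pipeline whenever a V4L2 event arrives.
# WAIT_CMD and MAX_RESTARTS are hypothetical knobs for this example.
# WAIT_CMD defaults to waiting for V4L2_EVENT_SOURCE_CHANGE (id 5).
WAIT_CMD=${WAIT_CMD:-"v4l2-ctl --device=${DEVICE:-/dev/video4} --wait-for-event=5"}
MAX_RESTARTS=${MAX_RESTARTS:-0}    # 0 means loop forever

# usage: run_with_restart <pipeline command...>
run_with_restart() {
    restarts=0
    while :; do
        "$@" &                            # launch the pipeline in the background
        pid=$!
        $WAIT_CMD                         # block until the event fires
        kill "$pid" 2>/dev/null || true   # stop the running pipeline
        wait "$pid" 2>/dev/null || true   # reap it before starting again
        restarts=$((restarts + 1))
        echo "restart $restarts"
        if [ "$MAX_RESTARTS" -gt 0 ] && [ "$restarts" -ge "$MAX_RESTARTS" ]; then
            break
        fi
    done
}

# Example:
# run_with_restart gst-launch-1.0 v4l2src device=$DEVICE ! $GST_CONVERT ! kmssink
```

To drive the loop from the Frame Interval Monitor instead, set WAIT_CMD to the `--wait-for-event=0x08000001` form shown above.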

IMX6 IPU MEM2MEM (Hardware CSC/scale/crop/rotate/flip)

A Linux V4L2 MEM2MEM imx-media driver exists that allows utilizing the IMX6 IPU Image Converter hardware blocks (IC) to perform hardware colorspace conversion (CSC), scaling, cropping, rotation, and flip operations.

The GStreamer video4linux2 plugin provides an element that uses this driver to expose these capabilities to GStreamer applications.

Notes:

- for GStreamer-1.14 the name of the element depends on the video device the driver registers with the kernel (e.g. v4l2video8convert if the mem2mem driver registers /dev/video8)

- for GStreamer master (in development) the name of the element is always 'v4l2videoconvert'

- the kmssink examples below need a can-scale=false property to tell GStreamer not to scale via the KMS driver (as the IMX6 KMS driver does not support scaling)

- ensure that the input format differs from the output format, otherwise GStreamer will bypass the conversion completely; note that GStreamer doesn't understand flipping or rotation as part of the format. GStreamer master (in development) adds a 'disable-passthrough' property to the v4l2videoconvert element that can be set to force the conversion regardless of input and output format

- when using imx entities (e.g. capture, encode/decode, mem2mem, display) you can specify 'output-io-mode=dmabuf-import' to share dmabuf pointers for a zero-copy pipeline; however, if using non-imx entities (e.g. videotestsrc) you must omit these as you can not ensure the buffers share the alignment/stride necessary to share dmabuf pointers

Examples:

- Ensure mem2mem is in your kernel:

    ~# dmesg | grep mem2mem
    [ 18.356023] imx-media: Registered ipu_ic_pp mem2mem as /dev/video8

See Gstreamer examples below
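Because the GStreamer-1.14 element name follows the device node, it can be derived from the dmesg line shown above. The helper below is a sketch; `convert_element` is a hypothetical name, and it assumes the kernel message has exactly the "Registered ipu_ic_pp mem2mem as /dev/videoN" form seen in the example.

```shell
#!/bin/sh
# convert_element is a hypothetical helper: it reads dmesg-style text on
# stdin and prints the GStreamer-1.14 element name for the mem2mem device
# (nothing is printed if no matching line is found).
convert_element() {
    sed -n 's|.*Registered ipu_ic_pp mem2mem as /dev/\(video[0-9][0-9]*\).*|v4l2\1convert|p' |
        head -n 1
}

# Example: CONVERT=$(dmesg | convert_element)
```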

IMX6 coda encode / decode driver

The Linux CODA driver provides access to the IMX6 hardware encode/decode codecs and the GStreamer video4linux2 plugin provides encode/decode elements that tap into this.

Examples:

- Ensure CODA is in your kernel:

    ~# dmesg | grep coda
    [ 16.721698] coda 2040000.vpu: Direct firmware load for vpu_fw_imx6q.bin failed with error -2
    [ 16.721724] coda 2040000.vpu: Falling back to syfs fallback for: vpu_fw_imx6q.bin
    [ 18.381136] coda 2040000.vpu: Using fallback firmware vpu/vpu_fw_imx6q.bin
    [ 18.433648] coda 2040000.vpu: Firmware code revision: 570363
    [ 18.433683] coda 2040000.vpu: Initialized CODA960.
    [ 18.433706] coda 2040000.vpu: Firmware version: 3.1.1
    [ 18.442312] coda 2040000.vpu: codec registered as /dev/video[9-10]

See Gstreamer examples below

Capture examples

Assuming that you have already configured your capture device (see above) and set it to the DEVICE env variable, the following examples are useful for capture and streaming:

Examples:

- basic raw frame capture with v4l2-ctl:

    # capture 1 frame
    v4l2-ctl --device /dev/video4 --stream-mmap --stream-to=x.raw --stream-count=1
    # convert with imagemagick (assumes you captured a 480p YUV frame)
    convert -size 720x480 -depth 16 uyvy:x.raw frame.png
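Before converting, it can help to confirm that the raw file has the size you expect. The sketch below assumes a packed UYVY capture (2 bytes per pixel), so a 720x480 frame should be 720 * 480 * 2 = 691200 bytes; `frame_bytes` and `check_frame` are hypothetical helper names.

```shell
#!/bin/sh
# frame_bytes is a hypothetical helper: bytes in one packed frame.
# UYVY stores 2 bytes per pixel, which is the default here.
# usage: frame_bytes <width> <height> [bytes per pixel]
frame_bytes() {
    echo $(( $1 * $2 * ${3:-2} ))
}

# check_frame succeeds if the file holds exactly one frame of that size.
# usage: check_frame <file> <width> <height>
check_frame() {
    actual=$(wc -c < "$1" | tr -d ' ')
    [ "$actual" -eq "$(frame_bytes "$2" "$3")" ]
}

# Example:
# check_frame x.raw 720 480 || echo "unexpected frame size" >&2
```

A mismatch usually means the pipeline was configured for a different resolution or pixel format than the one passed to the conversion step.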

GStreamer Capture, Display, and Stream Examples

Assuming that you have already configured your capture device (see above) and set it to the DEVICE env variable, the following examples are useful for capture and streaming using GStreamer. For more explicit examples see our GStreamer page.

First make sure you have gstreamer installed. You can pick and choose what you want but the following will install pretty much everything on an Ubuntu system:

# install gstreamer:

apt-get install libgstreamer1.0-0 gstreamer1.0-plugins-base gstreamer1.0-plugins-good gstreamer1.0-plugins-bad gstreamer1.0-plugins-ugly gstreamer1.0-libav gstreamer1.0-doc gstreamer1.0-tools gstreamer1.0-x gstreamer1.0-alsa gstreamer1.0-gl gstreamer1.0-gtk3 gstreamer1.0-qt5 gstreamer1.0-pulseaudio

Capture / Display

- Display capture source:

    # display kmssink
    gst-launch-1.0 v4l2src device=$DEVICE ! kmssink
    # display fbdevsink
    gst-launch-1.0 v4l2src device=$DEVICE ! v4l2video10convert output-io-mode=dmabuf-import ! fbdevsink

Capture / Encode

- Ensure GStreamer encode elements exist:

    ~# gst-inspect-1.0 | grep -e "v4l2.*enc"
    video4linux2: v4l2h264enc: V4L2 H.264 Encoder
    video4linux2: v4l2mpeg4enc: V4L2 MPEG4 Encoder

- Encode to JPEG (software based encode) and stream via RTP/UDP:

    # stream JPEG/RTP/UDP
    gst-launch-1.0 v4l2src device=$DEVICE ! jpegenc ! rtpjpegpay ! udpsink host=$SERVER port=$PORT
    # client on $SERVER:$PORT could be viewing via 'gst-launch-1.0 udpsrc port=5000 ! application/x-rtp,payload=96 ! rtpjpegdepay ! jpegdec ! autovideosink'

- Encode to H264 (hardware based encode) and stream via RTP/UDP:

    # stream H264/RTP/UDP
    gst-launch-1.0 v4l2src device=$DEVICE ! \
      v4l2video10convert output-io-mode=dmabuf-import ! \
      v4l2h264enc output-io-mode=dmabuf-import ! \
      rtph264pay ! udpsink host=$SERVER port=$PORT
    # client on $SERVER:$PORT could be viewing via 'gst-launch-1.0 udpsrc port=5001 caps=application/x-rtp,payload=96 ! rtph264depay ! decodebin ! autovideosink'

Stream

- "gst-variable-rtsp-server" is the recommended method to use for streaming video; you can download it from our PPA.

    add-apt-repository ppa:gateworks-software/packages
    apt-get install gst-gateworks-apps

- With this installed test with the following commands:

    #on SBC
    gst-variable-rtsp-server -p 9001 -u "videotestsrc ! jpegenc ! rtpjpegpay name=pay0 pt=96"
    #In this example a test source is used, this can be any source. Other encoding methods can be used as well.

    #on Workstation
    gst-launch-1.0 rtspsrc location=rtsp://192.168.1.1:9001/stream latency=10 ! decodebin ! autovideosink

- Note: gst-variable-rtsp-server will display the IP 127.0.0.1 when it is launched. This IP is hard-coded into a print statement in the program and is totally arbitrary; ignore it.

Convert

The GStreamer video4linux2 plugin provides an element that uses this driver to expose these capabilities to GStreamer applications.

Notes:

- for GStreamer-1.14 the name of the element depends on the video device the driver registers with the kernel (e.g. v4l2video8convert if the mem2mem driver registers /dev/video8)

- for GStreamer master (in development) the name of the element is always 'v4l2videoconvert'

- the kmssink examples below need a can-scale=false property to tell GStreamer not to scale via the KMS driver (as the IMX6 KMS driver does not support scaling)

- ensure that the input format differs from the output format, otherwise GStreamer will bypass the conversion completely; note that GStreamer doesn't understand flipping or rotation as part of the format. GStreamer master (in development) adds a 'disable-passthrough' property to the v4l2videoconvert element that can be set to force the conversion regardless of input and output format

- when using imx entities (e.g. capture, encode/decode, mem2mem, display) you can specify 'output-io-mode=dmabuf-import' to share dmabuf pointers for a zero-copy pipeline; however, if using non-imx entities (e.g. videotestsrc) you must omit these as you can not ensure the buffers share the alignment/stride necessary to share dmabuf pointers

Examples:

- Ensure mem2mem is in your kernel:

    ~# dmesg | grep mem2mem
    [ 18.356023] imx-media: Registered ipu_ic_pp mem2mem as /dev/video8

- Ensure GStreamer element exists:

    ~# gst-inspect-1.0 | grep -e "v4l2.*convert"
    video4linux2: v4l2video8convert: V4L2 Video Converter

- Note that for GStreamer-1.14, the name of the element depends on the video device the driver registers with the kernel (video8 in the above example). This changes in GStreamer-1.16 to always be 'v4l2videoconvert'

- scale/rotate/flip using videotestsrc (can not use dmabufs for this as it is a non-imx entity):

    # upscale
    gst-launch-1.0 videotestsrc ! video/x-raw,width=320,height=240 ! \
      v4l2video8convert ! \
      video/x-raw,width=640,height=480 ! kmssink can-scale=false
    # downscale
    gst-launch-1.0 videotestsrc ! video/x-raw,width=640,height=480 ! \
      v4l2video8convert ! \
      video/x-raw,width=320,height=240 ! kmssink can-scale=false
    # rotate
    gst-launch-1.0 videotestsrc ! video/x-raw,width=320,height=240 ! \
      v4l2video8convert extra-controls=cid,rotate=90 ! \
      video/x-raw,width=240,height=320 ! kmssink can-scale=false
    # hflip
    gst-launch-1.0 videotestsrc ! video/x-raw,width=320,height=240 ! \
      v4l2video8convert extra-controls=cid,horizontal_flip=1 ! \
      video/x-raw,width=640,height=480 ! kmssink can-scale=false
    # vflip
    gst-launch-1.0 videotestsrc ! video/x-raw,width=320,height=240 ! \
      v4l2video8convert extra-controls=cid,vertical_flip=1 ! \
      video/x-raw,width=640,height=480 ! kmssink can-scale=false

- note that the above examples force the input format (resolution in this case) to differ from the output format. Otherwise GStreamer considers the converter element unnecessary and bypasses it, because flip and rotation are not part of the format GStreamer negotiates. GStreamer master (in development) adds the 'disable-passthrough' property, which can be enabled to force the conversion.

- scale/rotate/flip using imx-media capture device and KMS display driver (can use dmabufs for this as they are all imx hardware entities):

# scale sensor input to 720p display
gst-launch-1.0 v4l2src device=$DEVICE ! \
  v4l2video8convert output-io-mode=dmabuf-import ! \
  video/x-raw,width=1280,height=720 ! \
  kmssink can-scale=false

# scale sensor input to 1080p display
gst-launch-1.0 v4l2src device=$DEVICE ! \
  v4l2video8convert output-io-mode=dmabuf-import ! \
  video/x-raw,width=1920,height=1080 ! \
  kmssink can-scale=false

# scale/flip
gst-launch-1.0 v4l2src device=$DEVICE ! \
  v4l2video8convert output-io-mode=dmabuf-import extra-controls=cid,horizontal_flip=1 ! \
  video/x-raw,width=1920,height=1080 ! \
  kmssink can-scale=false

# scale/rotate
gst-launch-1.0 v4l2src device=$DEVICE ! \
  v4l2video8convert output-io-mode=dmabuf-import extra-controls=cid,rotate=90 ! \
  video/x-raw,width=720,height=1280 ! \
  kmssink can-scale=false

- capture, scale, rotate, flip and encode using the imx-media capture device, mem2mem device and coda device (can use dmabufs for zero-copy):

# encode
gst-launch-1.0 v4l2src device=$DEVICE ! \
  v4l2video8convert output-io-mode=dmabuf-import ! \
  v4l2h264enc output-io-mode=dmabuf-import ! \
  rtph264pay ! udpsink host=$SERVER port=$PORT

# scale/encode
gst-launch-1.0 v4l2src device=$DEVICE ! \
  v4l2video8convert output-io-mode=dmabuf-import ! \
  video/x-raw,width=1440,height=960 ! \
  v4l2h264enc output-io-mode=dmabuf-import ! \
  rtph264pay ! udpsink host=$SERVER port=$PORT

# scale/flip/encode
gst-launch-1.0 v4l2src device=$DEVICE ! \
  v4l2video8convert output-io-mode=dmabuf-import extra-controls=cid,horizontal_flip=1 ! \
  video/x-raw,width=1440,height=960 ! \
  v4l2h264enc output-io-mode=dmabuf-import ! \
  rtph264pay ! udpsink host=$SERVER port=$PORT

# scale/rotate/encode
gst-launch-1.0 v4l2src device=$DEVICE ! \
  v4l2video8convert output-io-mode=dmabuf-import extra-controls=cid,rotate=90 ! \
  video/x-raw,width=1440,height=960 ! \
  v4l2h264enc output-io-mode=dmabuf-import ! \
  rtph264pay ! udpsink host=$SERVER port=$PORT
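Because the converter element name differs between GStreamer versions (for example 'v4l2video8convert' on 1.14 versus 'v4l2videoconvert' on 1.16), it can help to look the name up instead of hard-coding it. The following is a minimal sketch, not part of any official tooling: the function name find_v4l2_convert and the CONVERT variable are made up for illustration, and it assumes a grep that supports -o (GNU grep and BusyBox grep both do). The function reads the gst-inspect-1.0 listing on stdin and prints the first matching element name.

```shell
#!/bin/sh
# Hypothetical helper: print the first V4L2 converter element name found in
# gst-inspect-1.0 output read from stdin (v4l2videoNconvert or v4l2videoconvert).
find_v4l2_convert() {
    grep -oE 'v4l2video[0-9]*convert' | head -n 1
}

# Typical use on the target (requires gst-inspect-1.0 and gst-launch-1.0):
#   CONVERT=$(gst-inspect-1.0 | find_v4l2_convert)
#   gst-launch-1.0 videotestsrc ! video/x-raw,width=320,height=240 ! \
#     "$CONVERT" ! video/x-raw,width=640,height=480 ! kmssink can-scale=false
```

If more than one converter is registered, this sketch simply takes the first one listed, which may not be the device you want; in that case select the element by hand.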

EDID

Display Devices (HDMI sink)

In order to understand details about your display you can use the following:

# show kernel command-line to see what video params were passed
cat /proc/cmdline

# show HDMI monitor supported resolutions
cat /sys/class/drm/card1-HDMI-A-1/modes

# show current mode
cat /sys/class/graphics/fb0/modes

# read/parse EDID
get-edid | parse-edid

Capture Devices (HDMI source)

HDMI sources require an EDID from the HDMI sink (ie monitor or capture device) to know what formats are allowed. On v4l2 capture devices such as the tda1997x, you provide this with 'v4l2-ctl --set-edid' on the subdev for the capture device. The capture device then presents the EDID to the HDMI source (it toggles its HPD line, which is the equivalent of a hotplug), and the HDMI source responds by sending the sink (capture device) a compatible format, which you can then query. You can provide either a raw EDID binary file or a type, from which a compatible EDID is generated automatically.
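Before handing a raw EDID binary file to the capture device, a quick sanity check can catch a truncated or wrong file. The sketch below is illustrative only: the function name check_edid_bin is hypothetical. It relies on two properties of the EDID format: blocks are 128 bytes each, and the base block starts with the fixed header 00 FF FF FF FF FF FF 00. It does not verify the per-block checksum or any of the EDID contents.

```shell
#!/bin/sh
# Hypothetical helper: return 0 if the file looks like a raw (binary) EDID.
#  - size must be a non-zero multiple of 128 bytes (one EDID block = 128 bytes)
#  - the first 8 bytes must be the fixed header 00 FF FF FF FF FF FF 00
check_edid_bin() {
    file=$1
    [ -f "$file" ] || return 1
    # Arithmetic expansion strips any padding some wc implementations emit
    size=$(($(wc -c < "$file")))
    [ "$size" -gt 0 ] || return 1
    [ $((size % 128)) -eq 0 ] || return 1
    # Dump the first 8 bytes as hex and remove spaces/newlines
    header=$(od -An -tx1 -N8 "$file" | tr -d ' \n')
    [ "$header" = "00ffffffffffff00" ]
}

# Typical use on the target:
#   check_edid_bin edid.bin && \
#     v4l2-ctl -d $(media-ctl -e "tda19971 2-0048") --set-edid=file=edid.bin,format=raw
```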

Examples:

- show options for setting edid:

v4l2-ctl --help-edid

- provide an EDID to the tda1997x by description:

v4l2-ctl -d $(media-ctl -e "tda19971 2-0048") --set-edid=type=hdmi

- provide an EDID from a file (hex or binary):

v4l2-ctl -d $(media-ctl -e "tda19971 2-0048") --set-edid=file=edid.hex # hex
v4l2-ctl -d $(media-ctl -e "tda19971 2-0048") --set-edid=file=edid.bin,format=raw # binary

- clear the EDID:

v4l2-ctl -d $(media-ctl -e "tda19971 2-0048") --clear-edid

- get the EDID:

# show it in hex format
v4l2-ctl -d $(media-ctl -e "tda19971 2-0048") --get-edid=format=hex

# save it to a file
v4l2-ctl -d $(media-ctl -e "tda19971 2-0048") --get-edid=pad=0,file=edid.hex,format=hex # hex
v4l2-ctl -d $(media-ctl -e "tda19971 2-0048") --get-edid=file=edid.bin,format=raw # binary

Notes:

- the 'pad' argument is optional and is omitted from most of the commands above; it defaults to pad 0, which is the only pad of the tda1997x